Check out our video on using All in One SEO on WordPress Multisite Networks here.

If you have a WordPress Multisite Network, you can manage global robots.txt rules that will be applied to all sites in the network.

IMPORTANT:

This feature currently only works for WordPress Multisite Networks that are set up as subdomains. It doesn’t work for those set up as subdirectories.

Tutorial Video

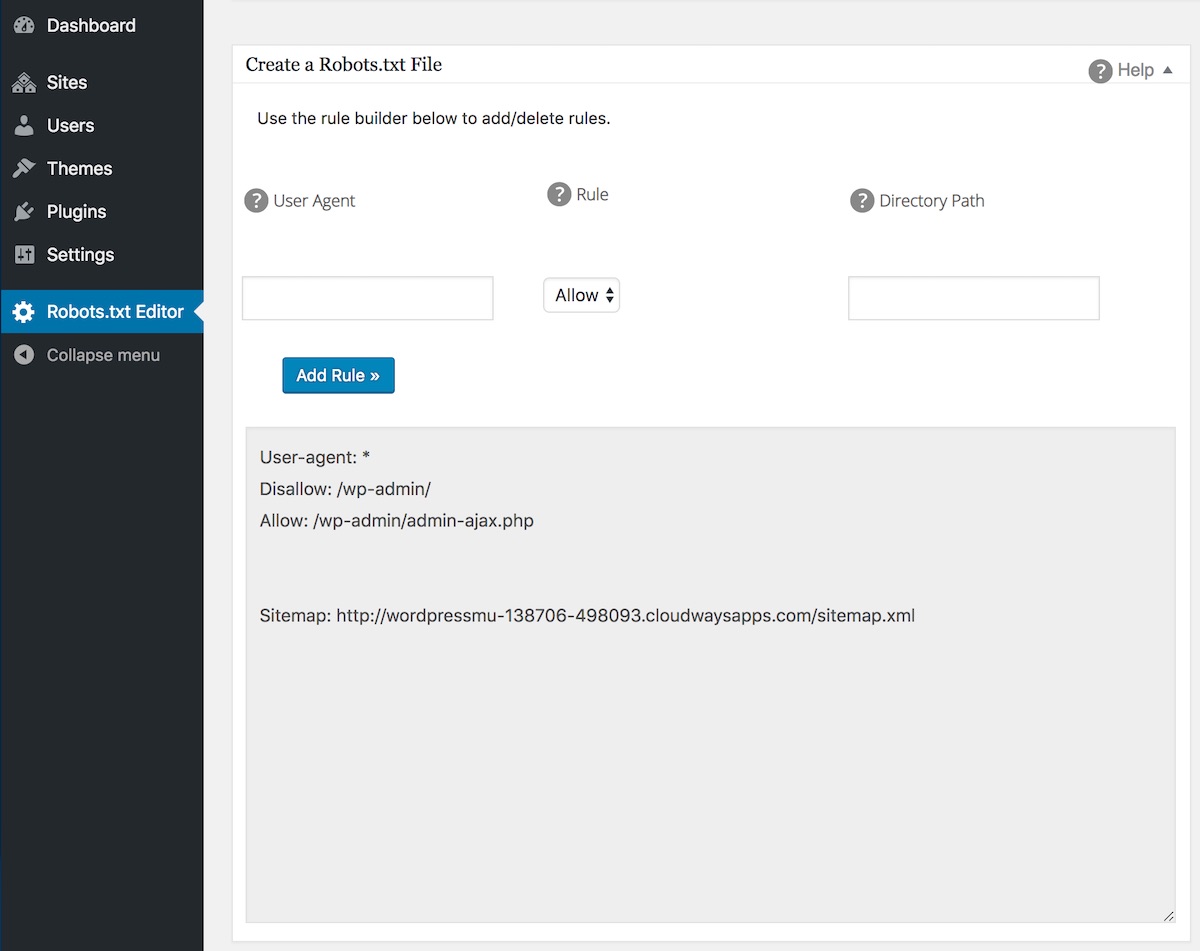

To get started, go to the Network Admin and then click on All in One SEO > Network Tools in the left-hand menu.

You will see our standard Robots.txt Editor where you can enable and manage custom rules for your robots.txt.

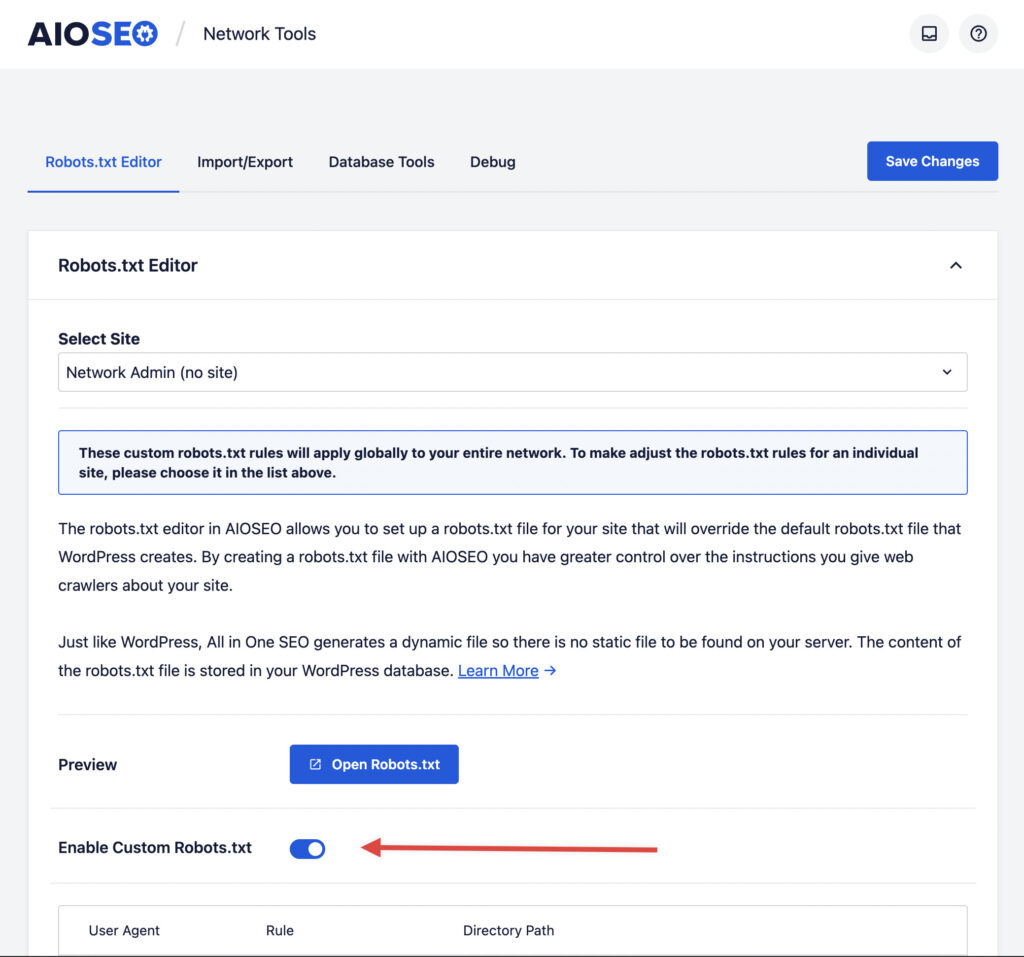

Click on the Enable Custom Robots.txt toggle to enable the rule editor.

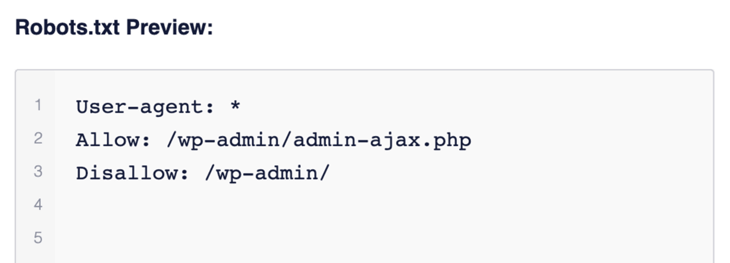

You should see the Robots.txt Preview section at the bottom of the screen, which shows the default rules added by WordPress.

Default Robots.txt Rules in WordPress

The default rules that show in the Robots.txt Preview section (shown in the screenshot above) ask robots not to crawl your core WordPress files. It’s unnecessary for search engines to access these files directly because they don’t contain any relevant site content.

To remove the default rules that WordPress adds, you’ll need to use the robots_txt filter hook in WordPress.

Adding Rules Using the Rule Builder

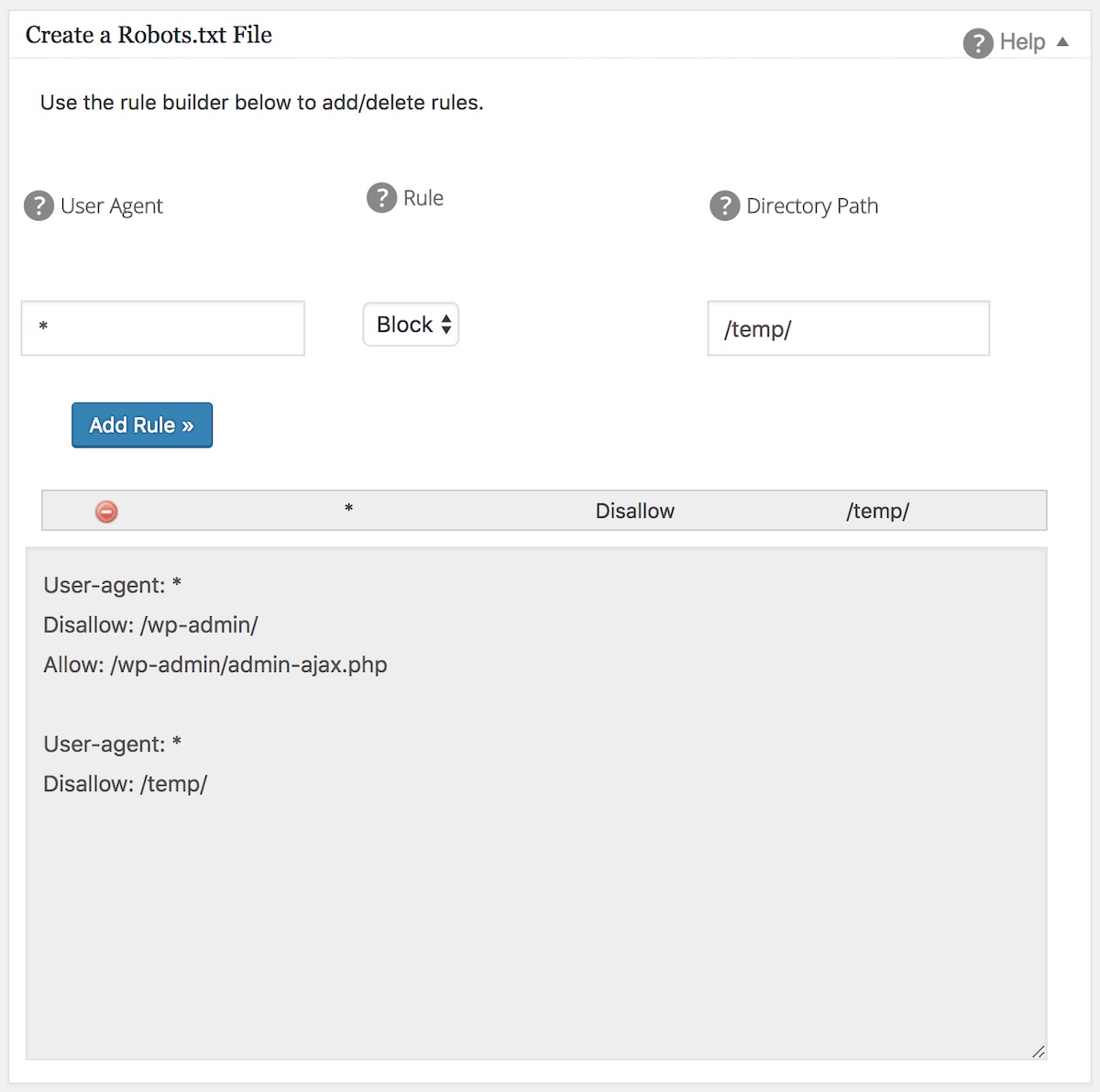

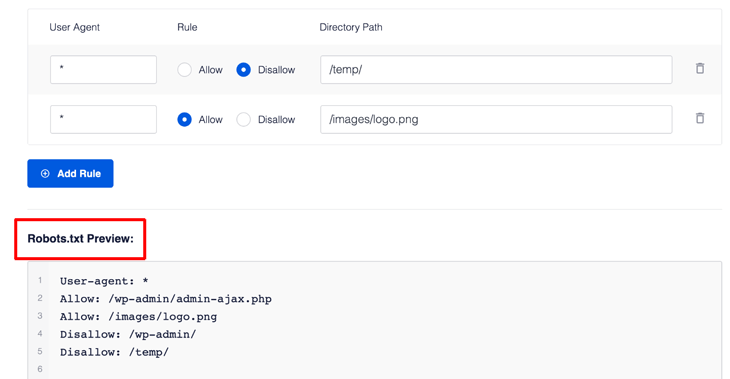

The rule builder is used to add your own custom rules for specific paths on your site.

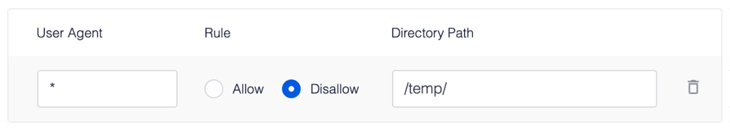

For example, to block all robots from a temp directory, you can add a rule for it with the rule builder.

To add a rule, enter the user agent in the User Agent field. Using * will apply the rule to all user agents.

Next, select either Allow or Disallow to allow or block the user agent.

Next, enter the directory path or filename in the Directory Path field.

Finally, click the Save Changes button.

To add more rules, click the Add Rule button, repeat the steps above, and then click the Save Changes button.

Your rules will appear in the Robots.txt Preview section and in your robots.txt, which you can view by clicking the Open Robots.txt button.
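
As a rough illustration of the temp directory example, the sketch below builds a rule from the three rule builder fields and checks it with Python's standard urllib.robotparser module. The build_rule helper, the /temp/ path, and the example.com URLs are assumptions made for illustration. The sketch models only this single rule, not the full robots.txt that All in One SEO generates.

```python
from urllib.robotparser import RobotFileParser


def build_rule(user_agent: str, directive: str, path: str) -> str:
    """Hypothetical helper: format one rule the way it appears in robots.txt."""
    if directive not in ("Allow", "Disallow"):
        raise ValueError("directive must be 'Allow' or 'Disallow'")
    return f"User-agent: {user_agent}\n{directive}: {path}\n"


# Values as they might be entered in the rule builder (assumed for this sketch).
rule_text = build_rule("*", "Disallow", "/temp/")

parser = RobotFileParser()
parser.parse(rule_text.splitlines())

# With User Agent set to *, every crawler falls under the same rule.
temp_ok = parser.can_fetch("Googlebot", "https://example.com/temp/draft.html")
about_ok = parser.can_fetch("Googlebot", "https://example.com/about/")
print(temp_ok, about_ok)
```

Here temp_ok reports whether a crawler may fetch a page inside the temp directory, and about_ok does the same for a page outside it. Python's parser is only one interpretation of robots.txt, so treat the result as a sanity check rather than a guarantee of how every search engine will behave.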

Any rule you add here will apply to all sites in your network and cannot be overridden at the individual site level.
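
One way to spot-check that a network rule reached every site is to compare each site's robots.txt against the line you expect. The sketch below is only an illustration under stated assumptions: the hostnames and robots.txt contents are made up, and sites_missing_rule is a hypothetical helper, not part of All in One SEO. On a live network you would load each site's /robots.txt instead of using the hard-coded samples.

```python
from __future__ import annotations


def sites_missing_rule(robots_by_site: dict[str, str], rule_line: str) -> list[str]:
    """Return the sites whose robots.txt does not contain rule_line."""
    wanted = rule_line.strip().lower()
    missing = []
    for site, robots_txt in robots_by_site.items():
        # Compare whole lines, ignoring case and surrounding whitespace.
        lines = {line.strip().lower() for line in robots_txt.splitlines()}
        if wanted not in lines:
            missing.append(site)
    return sorted(missing)


# Made-up robots.txt contents for three subdomain sites (illustration only).
samples = {
    "shop.example.com": "User-agent: *\nDisallow: /wp-admin/\nDisallow: /temp/\n",
    "blog.example.com": "User-agent: *\nDisallow: /wp-admin/\nDisallow: /temp/\n",
    "docs.example.com": "User-agent: *\nDisallow: /wp-admin/\n",
}

missing = sites_missing_rule(samples, "Disallow: /temp/")
print(missing)
```

The check is deliberately simple: it looks for the rule line anywhere in the file and does not verify which User-agent group the line belongs to.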

Editing Rules Using the Rule Builder

To edit any rule you’ve added, just change the details in the rule builder and click the Save Changes button.

Deleting a Rule in the Rule Builder

To delete a rule you’ve added, click the trash can icon to the right of the rule.

Managing Robots.txt for Subsites

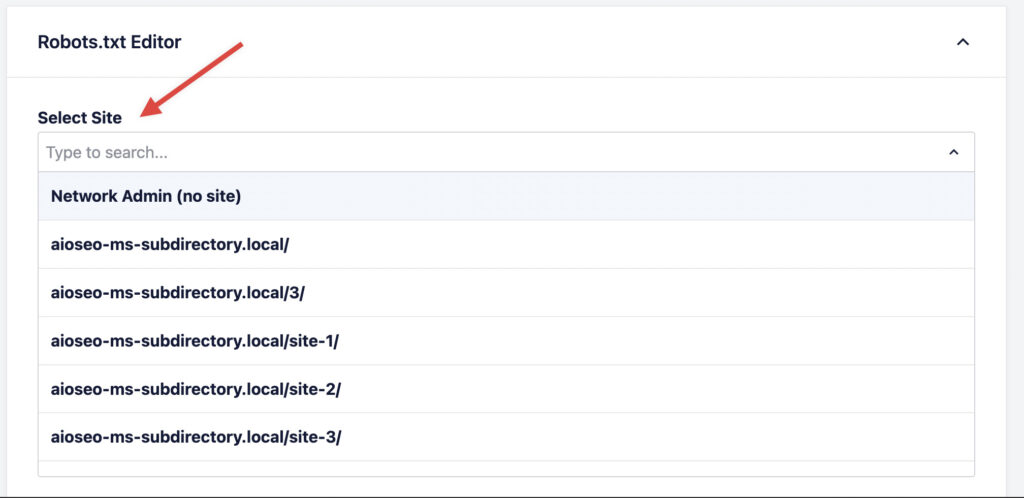

In the multisite Robots.txt Editor, you can edit the robots.txt rules for any site on your network.

To get started, click on the site selector dropdown at the top of the Robots.txt Editor. From there, select the site you wish to edit the rules for, or search for it by typing in the domain.

Once you’ve selected a site, the rules below will automatically refresh and you can modify the rules for just that site. Make sure to click Save Changes after modifying the rules for the subsite.