Would you like to increase the chances of your content being properly crawled and indexed?

Then you’ll be as excited as we are about our latest plugin update!

We’ve listened to your feedback and added more power and functionality to our robots.txt editor.


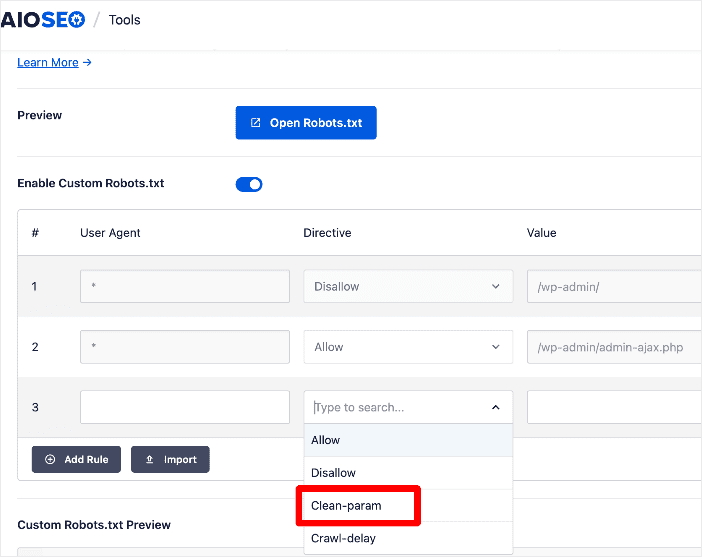

Clean-param: Reduce Unnecessary Crawls

One of the new crawl directives we’ve added to our robots.txt editor is the Clean-param directive.

This powerful directive tells search bots to ignore URL parameters that don't change a page's content, such as GET parameters (for example, session IDs) and UTM tracking tags. Left unchecked, these parameters can cause several SEO problems, including:

- Duplicate content issues

- Wasted crawl budget

- Indexation issues

Together, these issues can make search engines take longer to reach and crawl the important pages on your site.

The Clean-param directive gives you more granular control over how search engines crawl your site. As a result, you increase the chances of the right pages ranking on search engines.

Note: Clean-param is currently only supported by Yandex.
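To show what Clean-param achieves, here is a minimal Python sketch that mimics its effect: URLs that differ only in the listed parameters collapse into one address. It assumes the Yandex-style syntax `Clean-param: p1&p2 [path]` with a simple path-prefix match. The function names `parse_clean_param` and `clean_url` are hypothetical, and this is an illustration, not how Yandex or AIOSEO implement the directive.

```python
from __future__ import annotations

from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def parse_clean_param(line: str) -> tuple[set[str], str]:
    """Split a 'Clean-param: p1&p2 [path]' line into (parameter names, path prefix)."""
    _, _, value = line.partition(":")
    parts = value.split()
    params = set(parts[0].split("&"))
    # Assumption: when no path is given, the rule applies to the whole site.
    prefix = parts[1] if len(parts) > 1 else "/"
    return params, prefix


def clean_url(url: str, params: set[str], prefix: str) -> str:
    """Drop the listed query parameters from URLs whose path starts with prefix."""
    scheme, netloc, path, query, fragment = urlsplit(url)
    if not path.startswith(prefix):
        return url  # rule does not cover this path; leave the URL untouched
    kept = [(k, v) for k, v in parse_qsl(query, keep_blank_values=True) if k not in params]
    return urlunsplit((scheme, netloc, path, urlencode(kept), fragment))


# Hypothetical example: three URLs a bot might discover for the same post.
params, prefix = parse_clean_param("Clean-param: sessionid&utm_source /blog/")
urls = [
    "https://example.com/blog/post?sessionid=123",
    "https://example.com/blog/post?sessionid=456&utm_source=news",
    "https://example.com/blog/post",
]
print({clean_url(u, params, prefix) for u in urls})  # {'https://example.com/blog/post'}
```

Three discovered URLs reduce to a single page to crawl, which is the saving in crawl budget and duplicate content described above.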

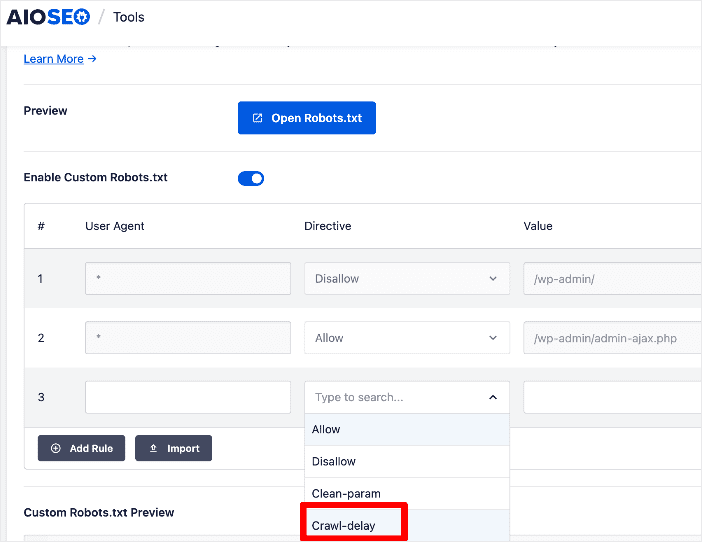

Crawl-delay: Regulate the Frequency Of Crawling

Another new directive in our robots.txt editor is the Crawl-delay directive.

Crawl-delay is a robots.txt directive that tells search engine bots how long to wait between requests when crawling your site. It is particularly useful when you want to limit how often bots visit so they don't overload your server, especially if you have limited server resources or high traffic. Too many bot requests in a short period can slow down your website or, in extreme cases, make it inaccessible.

Adding the Crawl-delay directive can help you avoid overloading your server during periods when you expect heavy traffic.

Note: Crawl-delay is only supported by Bing, Yahoo, and Yandex.
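As a rough illustration of how a well-behaved bot reads this directive, the Python sketch below uses the standard library's `urllib.robotparser.RobotFileParser`, whose `crawl_delay()` method returns the delay for a given user agent. The robots.txt content, the helper names `delay_for` and `fetch_schedule`, and the simple "one request every N seconds" schedule are assumptions for illustration; each search engine applies the value in its own way.

```python
from __future__ import annotations

from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: slow Bingbot down, give every other bot a normal rule.
ROBOTS_TXT = """\
User-agent: Bingbot
Crawl-delay: 10

User-agent: *
Disallow: /wp-admin/
"""


def delay_for(robots_txt: str, user_agent: str) -> float:
    """Return the Crawl-delay (seconds) that applies to user_agent, or 0.0 if none is set."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    delay = parser.crawl_delay(user_agent)
    return float(delay) if delay is not None else 0.0


def fetch_schedule(urls: list[str], delay: float) -> list[tuple[float, str]]:
    """Pair each URL with the earliest time (seconds from start) a polite bot would request it."""
    return [(i * delay, url) for i, url in enumerate(urls)]


print(delay_for(ROBOTS_TXT, "Bingbot"))    # 10.0
print(delay_for(ROBOTS_TXT, "Googlebot"))  # 0.0 (no delay set for this agent)
print(fetch_schedule(["/a", "/b", "/c"], delay_for(ROBOTS_TXT, "Bingbot")))
```

With a 10-second delay, three pages take at least 20 seconds to request instead of arriving all at once, which is how the directive spreads bot load over time.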

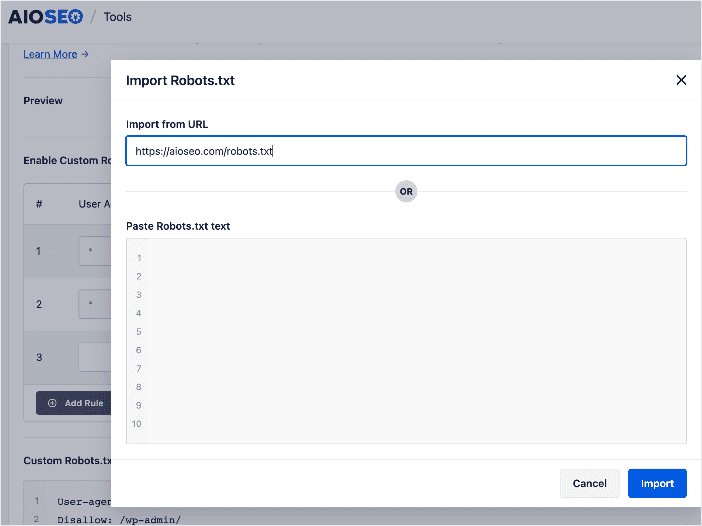

Import Robots.txt Rules From Any Site

If you're setting up a new website and want to implement a basic robots.txt file, you can save time and effort by simply using an existing site's rules as a starting point.

To help you do this, we’ve added an Import function to our robots.txt editor.

You can import another site's robots.txt rules either by entering the site's URL or by copying the contents of its robots.txt file and pasting them into the editor.

If you're working on a website similar to another one, you might find that they have implemented useful rules that are applicable to your site as well. This can save you time in crafting your own rules. It can also help you avoid making mistakes if you’re still new to editing robots.txt files.
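For readers curious about what an import involves, here is a minimal Python sketch of the two paths described above. It assumes that robots.txt lives at the root of the site's host and that blank lines and `#` comments can be dropped before reuse. The helper names are hypothetical, and this is not how AIOSEO's Import function works internally.

```python
from __future__ import annotations

from urllib.parse import urlsplit, urlunsplit
from urllib.request import urlopen


def robots_url(site_url: str) -> str:
    """Build the robots.txt location for the host of any page URL (assumes https if no scheme)."""
    parts = urlsplit(site_url if "://" in site_url else "https://" + site_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


def extract_rules(robots_txt: str) -> list[str]:
    """Keep the directive lines of a robots.txt file, dropping blanks and comments."""
    rules = []
    for line in robots_txt.splitlines():
        line = line.split("#", 1)[0].strip()  # '#' starts a comment in robots.txt
        if line:
            rules.append(line)
    return rules


def import_from_url(site_url: str, timeout: float = 10.0) -> list[str]:
    """Path 1: download a site's robots.txt over the network and extract its rules."""
    with urlopen(robots_url(site_url), timeout=timeout) as response:
        return extract_rules(response.read().decode("utf-8", errors="replace"))


# Path 2: rules copied and pasted from another site (hypothetical content).
pasted = "# Example\nUser-agent: *\n\nDisallow: /wp-admin/ # admin area\n"
print(extract_rules(pasted))  # ['User-agent: *', 'Disallow: /wp-admin/']
```

Treat imported rules as a starting point: paths that make sense on someone else's site may not exist on yours.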

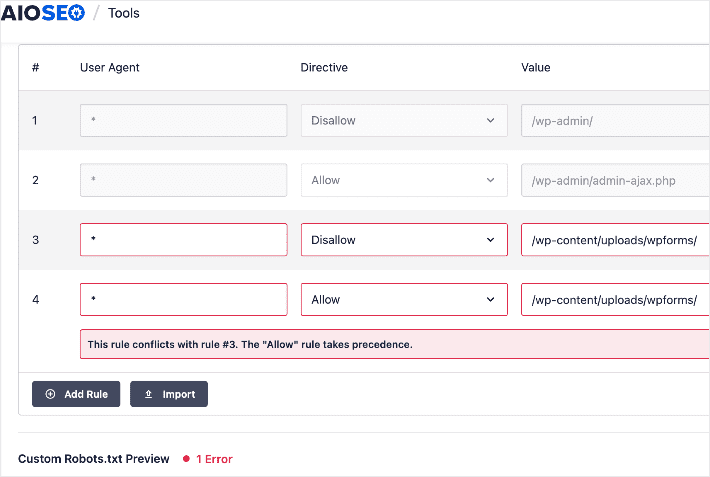

Improved Rule Validation and Error Handling

Besides the powerful directives and import functionality, we’ve also improved the rule validation and error handling in our robots.txt editor. This helps you quickly spot conflicting rules or other errors in your robots.txt.

You can easily delete an erroneous rule by clicking the trash can icon next to it.
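To give a sense of the kinds of errors a validator can catch, here is a hypothetical Python sketch that flags three common problems: unknown directives, Allow/Disallow rules that appear before any User-agent line, and the same path being both allowed and disallowed within one group. The `validate` function and its list of known directives are assumptions for illustration, not AIOSEO's actual validation logic. Sitemap and Clean-param lines are treated here as independent of user-agent groups.

```python
from __future__ import annotations

# Assumption: the directives this sketch accepts; anything else is reported.
KNOWN = {"user-agent", "allow", "disallow", "sitemap", "crawl-delay", "clean-param"}


def validate(robots_txt: str) -> list[str]:
    """Return human-readable problems found in a robots.txt file."""
    problems: list[str] = []
    group: list[str] = []        # user agents of the current group
    paths: dict[str, str] = {}   # path -> 'allow'/'disallow' within the current group
    in_rules = False             # True once the current group has at least one rule
    for number, raw in enumerate(robots_txt.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # ignore comments and blank lines
        if not line:
            continue
        key, sep, value = line.partition(":")
        key, value = key.strip().lower(), value.strip()
        if not sep or key not in KNOWN:
            problems.append(f"line {number}: unknown directive {line!r}")
            continue
        if key == "user-agent":
            if in_rules:  # a User-agent after rules starts a new group
                group, paths, in_rules = [], {}, False
            group.append(value)
            continue
        if key in ("sitemap", "clean-param"):
            continue  # treated here as not tied to a user-agent group
        if not group:
            problems.append(f"line {number}: {line!r} appears before any User-agent")
            continue
        in_rules = True
        if key in ("allow", "disallow"):
            previous = paths.get(value)
            if previous is not None and previous != key:
                problems.append(f"line {number}: Allow and Disallow both match {value!r}")
            paths[value] = key
    return problems


# Hypothetical file with a conflicting rule and an unsupported directive.
print(validate("User-agent: *\nDisallow: /private/\nAllow: /private/\nNoindex: /tmp/\n"))
```

Reporting line numbers mirrors the editor's approach of pointing at the specific rule, so the offending line can be deleted directly.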

With these latest additions to our plugin, improving your crawlability and indexability has just become easier. As a result, your site and content stand a better chance of ranking high on search engine results pages.

We’ve also added more advanced functionality to our robots.txt editor. Check out this documentation for more details.

Besides this significant update, we’ve made several notable improvements to many features you love. You can see all our changes in our full product changelog.

What are you waiting for?

Update your site to AIOSEO 4.4.4 to unlock these powerful new features and rank your content higher on SERPs.

And if you’re not yet using AIOSEO, make sure to install and activate the plugin today.

If you have questions about these features, please comment below or contact our customer support team. We’re always ready to help. Our commitment to making AIOSEO the easiest and best WordPress SEO plugin is only getting stronger as we continue to win together with our customers.

We’re so grateful for your continued support, feedback, and suggestions. Please keep those suggestions and feature requests coming!

We hope you enjoy using these new SEO features as much as we enjoyed creating them.

-Benjamin Rojas (President of AIOSEO).

Disclosure: Our content is reader-supported. This means if you click on some of our links, then we may earn a commission. We only recommend products that we believe will add value to our readers.
