A robots.txt editor is a tool that provides a user-friendly interface for creating, modifying, and managing the robots.txt file of a website. These editors simplify the process of editing the robots.txt file, making it more accessible to users who may not be familiar with the syntax or structure of the file.

User-friendly robots.txt editors make the file easier to edit in several ways:

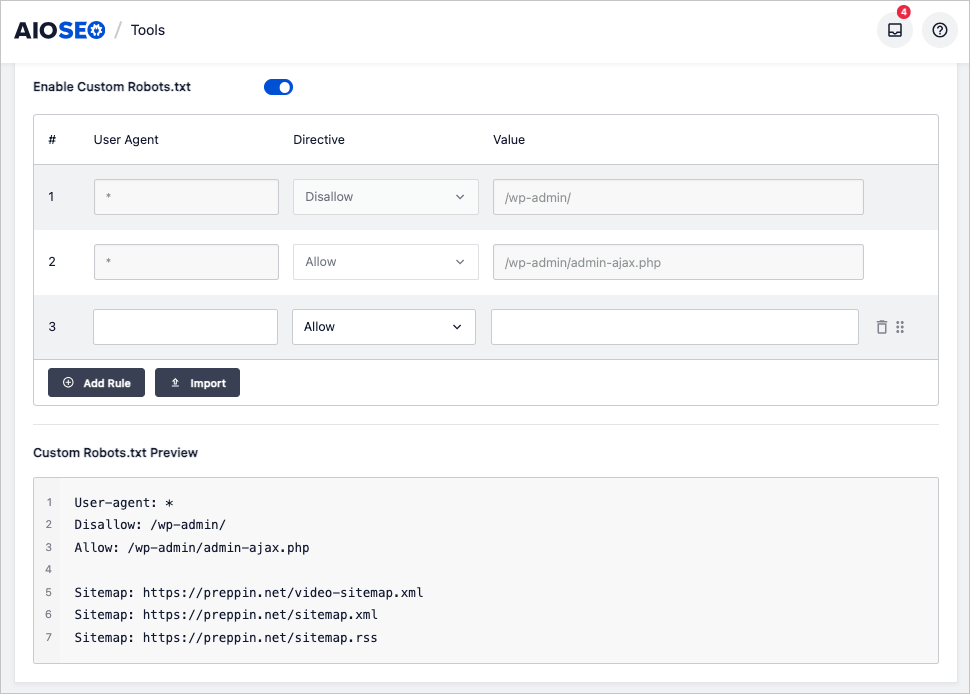

- Visual interface: Editors often provide a visual, intuitive interface that allows users to create and modify rules without directly dealing with the syntax of the robots.txt file.

- Syntax validation: User-friendly editors can validate the syntax of the robots.txt file in real time, helping users avoid common mistakes and ensuring that the file is properly formatted.

- Rule generation: Some editors provide pre-built rule templates or wizards that guide users through the process of creating rules for common scenarios, such as disallowing specific directories or file types.

- Preview and testing: Advanced editors may let users preview how the robots.txt file will affect search engine crawlers, or test the file against different user agents to confirm it works as intended.

- Collaboration and version control: Some editors integrate with version control systems or provide collaboration features, making it easier for teams to work together on maintaining the robots.txt file.
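
To make the syntax validation and testing features above more concrete, here is a minimal Python sketch of what such checks might look like behind an editor's interface. It is not taken from any particular editor. The sample rules, the `ExampleBot` name, the `example.com` URLs, the helper names, and the list of accepted fields are all assumptions made for illustration. The testing part relies on Python's standard `urllib.robotparser` module.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/

User-agent: ExampleBot
Disallow: /
"""

# Assumption: the fields this sketch accepts. Real editors may accept more.
KNOWN_FIELDS = {"user-agent", "allow", "disallow", "sitemap", "crawl-delay"}


def find_syntax_problems(robots_txt):
    """Return (line_number, message) pairs for lines that look malformed."""
    problems = []
    for number, raw in enumerate(robots_txt.splitlines(), start=1):
        # Drop comments and surrounding whitespace; skip blank lines.
        line = raw.split("#", 1)[0].strip()
        if not line:
            continue
        field, separator, _value = line.partition(":")
        if not separator:
            problems.append((number, "missing ':' separator"))
        elif field.strip().lower() not in KNOWN_FIELDS:
            problems.append((number, f"unknown field {field.strip()!r}"))
    return problems


def can_fetch(robots_txt, user_agent, url):
    """Check whether the given user agent may fetch the URL under these rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    print(find_syntax_problems("Disalow: /x\nUser-agent *"))
    print(can_fetch(ROBOTS_TXT, "Googlebot", "https://example.com/private/page.html"))
    print(can_fetch(ROBOTS_TXT, "ExampleBot", "https://example.com/blog/"))
```

A visual editor would typically surface the same information as inline warnings and a pass/fail result per user agent, rather than as printed values.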

By using a user-friendly robots.txt editor, website owners and developers can save time, reduce errors, and ensure that their robots.txt file is properly configured to manage search engine crawlers' access to their site.

A Robots.txt Editor for WordPress

The All in One SEO (AIOSEO) plugin includes a user-friendly robots.txt editor. The plugin will automatically generate a robots.txt file and include a link to your sitemap in it.
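
As a rough illustration of what an automatically generated file with a sitemap link can look like, the Python sketch below assembles one. It does not reproduce AIOSEO's actual output or code. The function name, the default rules (modeled on a typical WordPress setup), and the `example.com` sitemap URL are assumptions.

```python
def build_robots_txt(
    sitemap_url,
    disallow=("/wp-admin/",),
    allow=("/wp-admin/admin-ajax.php",),
):
    """Assemble a robots.txt body: one rule group for all crawlers plus a sitemap link."""
    lines = ["User-agent: *"]
    lines += [f"Disallow: {path}" for path in disallow]
    lines += [f"Allow: {path}" for path in allow]
    # A blank line separates the rule group from the sitemap reference.
    lines += ["", f"Sitemap: {sitemap_url}"]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical sitemap URL, for illustration only.
    print(build_robots_txt("https://example.com/sitemap.xml"), end="")
```

Building the file from lists of paths, rather than from free text, is one way an editor can keep the output well-formed no matter what the user enters.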

The editor provides text fields for editing the file. Learn more in The Ultimate Guide to WordPress Robots.txt Files.