Would you like to know how to generate robots.txt files in WordPress?

When I first started managing WordPress sites, I had no idea what a robots.txt file was. My SEO rankings were inconsistent, and I couldn't figure out why search engines seemed to be indexing random pages while missing my important content.

Then I discovered that WordPress doesn't automatically create an optimized robots.txt file. Many small business owners face this same frustrating problem: search engines end up crawling their sites inefficiently, wasting crawl budget on unimportant pages.

After managing dozens of WordPress sites over the years, I've learned that a properly configured robots.txt file is crucial for SEO success. It tells search engines which pages they should crawl and which ones they should skip.

The good news? You don't need any coding skills to create and optimize your robots.txt file in WordPress. There are simple, user-friendly tools that handle everything for you.

In this guide, I'll show you exactly how to generate and customize your robots.txt file using no-code solutions. You'll learn which pages to block, which to prioritize, and how to avoid common mistakes that could hurt your SEO rankings.


What is a Robots.txt File

Robots.txt is a text file that website owners can create to tell search engine bots how to interact with their site, mainly which pages to crawl and which to ignore. In essence, it acts as your site’s gatekeeper. It is stored in the root directory (also known as the main folder) of your website.

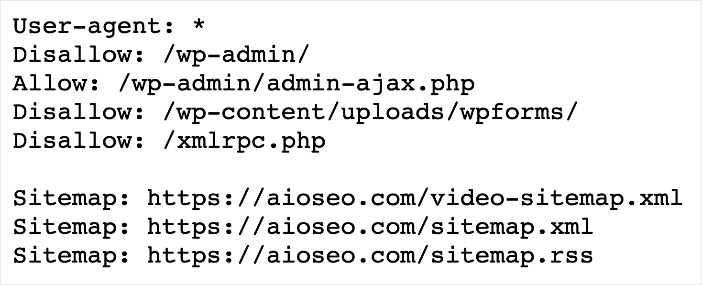

This is what it looks like:

You can easily access your robots.txt file by visiting https://yoursite.com/robots.txt.
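Crawlers only request robots.txt from the root of a host. That means you can work out which file governs any page by dropping everything after the domain. Here’s a minimal Python sketch of that rule using only the standard library. `robots_url` is a hypothetical helper name and `yoursite.com` is a placeholder.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url: str) -> str:
    """Return the URL of the robots.txt file that governs page_url.

    Crawlers only look for robots.txt at the root of a host, so the page's
    path, query string, and fragment are dropped; scheme, host, and port stay.
    """
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


if __name__ == "__main__":
    print(robots_url("https://yoursite.com/blog/my-post/?ref=home"))  # https://yoursite.com/robots.txt
```

Each host gets its own file. A subdomain like `shop.yoursite.com`, or a different protocol or port, is governed by its own robots.txt, not the one on your main domain.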

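This should not be confused with robots meta tags, which are HTML tags embedded in the head of a webpage. A robots meta tag (such as noindex) applies only to the page it’s on and controls whether that page gets indexed. Robots.txt, by contrast, controls which URLs bots crawl across your whole site.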

Importance of a Robots.txt File

Here are some things you can use a robots.txt file for:

- Control crawling

- Improve indexing

- Prevent duplicate content issues

- Keep crawlers out of private areas (robots.txt is publicly readable, so it's not a substitute for password protection)

- Optimize crawl budget

- Enhance site performance

Despite being a simple text file, having an optimized robots.txt file can significantly impact your SEO.

How to Generate a Robots.txt File In WordPress

Ready to generate robots.txt files?

Note: WordPress automatically generates a virtual robots.txt file for your site. However, the default file is very basic and usually needs to be optimized.
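For reference, the virtual file WordPress serves for a public site typically contains the rules in the `DEFAULT_WP` string below. The exact output can vary with your WordPress version and settings. This Python sketch splits such a file into user-agent groups and Sitemap URLs, which is handy for reviewing what you have before you customize it. `parse_robots` and `Group` are hypothetical names, and the parser is deliberately simplified.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Group:
    """One robots.txt group: the user agents it targets and their rules."""
    user_agents: list[str] = field(default_factory=list)
    rules: list[tuple[str, str]] = field(default_factory=list)  # (directive, value)


def parse_robots(text: str) -> tuple[list[Group], list[str]]:
    """Split robots.txt text into user-agent groups and Sitemap URLs.

    Simplified: '#' comments are stripped, directive names are compared
    case-insensitively, and consecutive User-agent lines share one group.
    """
    groups: list[Group] = []
    sitemaps: list[str] = []
    current: Group | None = None
    previous_was_agent = False
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()
        if ":" not in line:
            continue  # blank, comment-only, or malformed line
        name, value = (part.strip() for part in line.split(":", 1))
        name = name.lower()
        if name == "user-agent":
            # A User-agent line that doesn't directly follow another one starts a new group.
            if current is None or not previous_was_agent:
                current = Group()
                groups.append(current)
            current.user_agents.append(value)
            previous_was_agent = True
            continue
        if name == "sitemap":
            sitemaps.append(value)  # Sitemap lines don't belong to any group
        elif current is not None:
            current.rules.append((name, value))
        previous_was_agent = False
    return groups, sitemaps


# A typical default for a public WordPress site; it may vary by version and settings.
# yoursite.com is a placeholder.
DEFAULT_WP = """\
User-agent: *
Disallow: /wp-admin/
Allow: /wp-admin/admin-ajax.php

Sitemap: https://yoursite.com/wp-sitemap.xml
"""

if __name__ == "__main__":
    groups, sitemaps = parse_robots(DEFAULT_WP)
    print(groups[0].user_agents)  # ['*']
    print(groups[0].rules)  # [('disallow', '/wp-admin/'), ('allow', '/wp-admin/admin-ajax.php')]
    print(sitemaps)  # ['https://yoursite.com/wp-sitemap.xml']
```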

In the steps below, I'll show you how to generate a custom robots.txt file. Don’t worry. No code or technical knowledge is needed.

Step 1: Install AIOSEO

The first step to generating custom robots.txt files is to install and activate All In One SEO (AIOSEO).

AIOSEO is a powerful yet easy-to-use SEO plugin that boasts over 3 million active installs. Millions of smart bloggers use AIOSEO to help them boost their search engine rankings and drive qualified traffic to their blogs. The plugin has many powerful features and modules to help you properly configure your SEO settings. Examples include:

- Cornerstone Content: Helps you build topical authority and enhances your semantic SEO.

- Search Statistics: This powerful Google Search Console integration lets you track your keyword rankings, see important SEO metrics with one click, and more.

- Advanced Robots.txt Generator: Easily generate and customize your robots.txt file for better crawling and indexing.

- Keyword Rank Tracker: Keep track of your keyword performance on Google right inside your WordPress dashboard.

- AI Writing Assistant: Identify relevant keywords, improve readability, and optimize your content for search engines to help it rank higher in search results.

- Next-gen Schema Generator: This no-code schema generator lets you generate and output any schema markup on your site.

- Redirection Manager: Helps you manage redirects and eliminate 404 errors, making it easier for search engines to crawl and index your site.

- Link Assistant: Powerful internal linking tool that automates building links between pages on your site. It also gives you an audit of outbound links.

- Sitemap Generator: Automatically generate different types of sitemaps to notify search engines of any updates on your site.

- And more

For step-by-step instructions on how to install AIOSEO, check out our installation guide.

One of the most loved features is our advanced robots.txt editor. This powerful tool makes it easy to customize your robots.txt file to suit your needs. It also automatically adds your sitemap URLs to the robots.txt file!

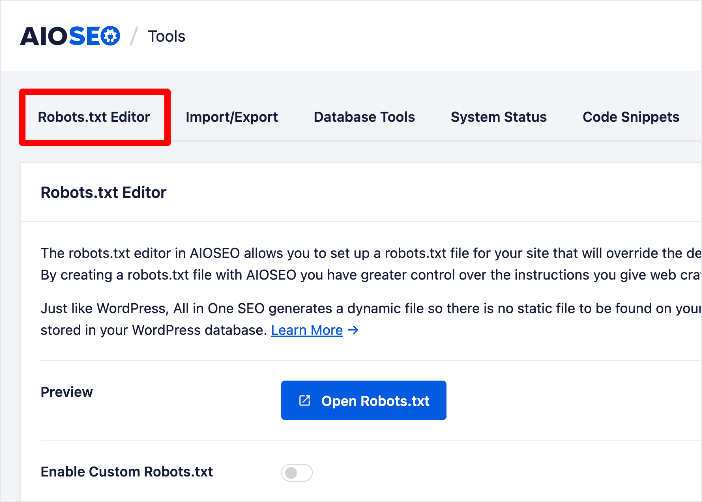
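To picture what adding sitemap URLs to robots.txt involves, here’s a hypothetical Python sketch that appends a `Sitemap:` line only for URLs that aren’t already listed. It isn’t AIOSEO’s implementation, and the sitemap URL in the example is a placeholder.

```python
from __future__ import annotations


def add_sitemaps(robots_txt: str, sitemap_urls: list[str]) -> str:
    """Append a 'Sitemap:' line for each URL not already in the file.

    Existing Sitemap lines are matched by directive name case-insensitively,
    so running the function twice doesn't create duplicates.
    """
    existing = {
        line.split(":", 1)[1].strip()
        for line in robots_txt.splitlines()
        if line.strip().lower().startswith("sitemap:")
    }
    missing = [url for url in sitemap_urls if url not in existing]
    if not missing:
        return robots_txt
    body = robots_txt.rstrip("\n")
    extra = "\n".join(f"Sitemap: {url}" for url in missing)
    # Separate the Sitemap block from the rules with a blank line.
    return f"{body}\n\n{extra}\n" if body else f"{extra}\n"


if __name__ == "__main__":
    rules = "User-agent: *\nDisallow: /wp-admin/\n"
    print(add_sitemaps(rules, ["https://yoursite.com/sitemap.xml"]))
```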

Step 2: Access the Robots.txt Editor

Once you’ve activated AIOSEO, the next step is to access AIOSEO’s Robots.txt Editor. To do that, go to your AIOSEO menu and click Tools » Robots.txt Editor.

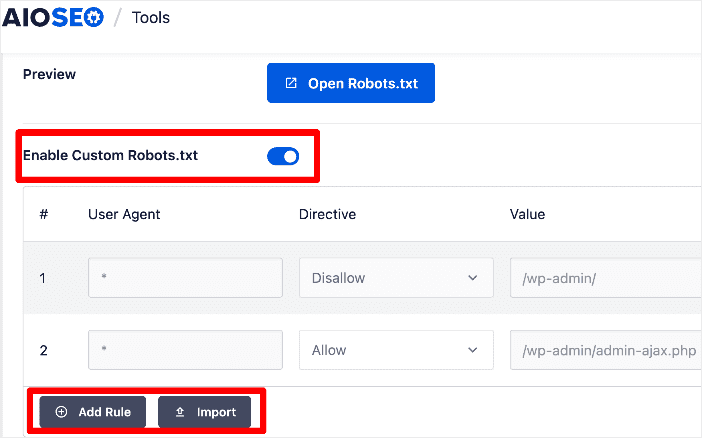

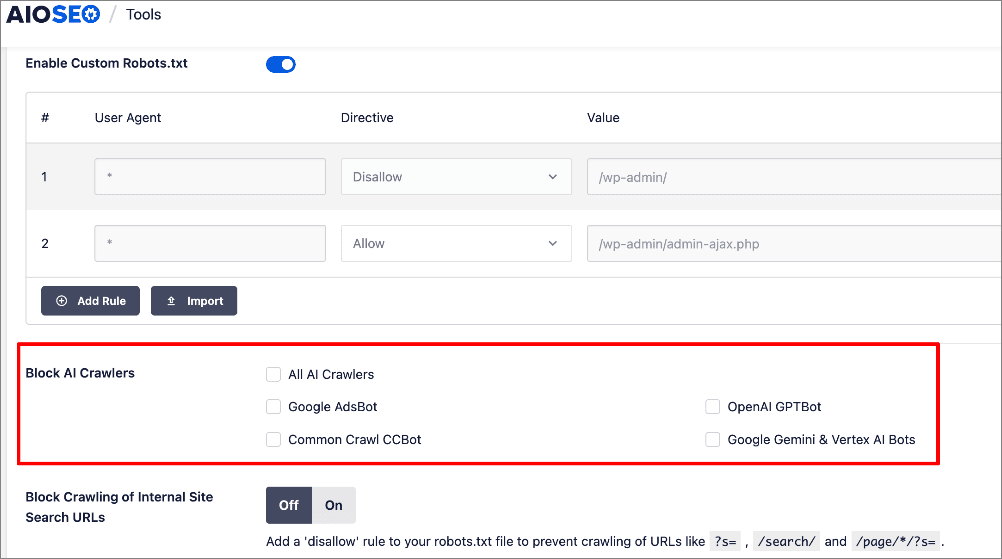

Next, click on the Enable Custom Robots.txt toggle. Doing so enables you to edit your robots.txt file.

Check out our list of the best robots.txt generators for more robots.txt tools.

Step 3: Add Robots.txt Directives

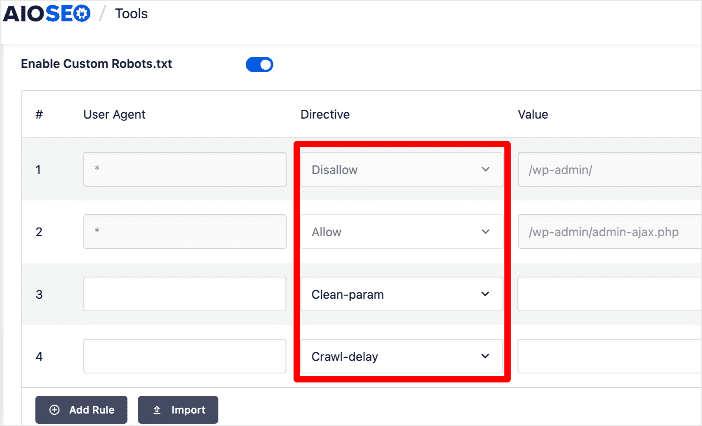

Once you’ve enabled editing of your robots.txt files, the next step is to add your custom directives.

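These are the instructions you want search bots to follow. Examples include Allow, Disallow, Clean-param, and Crawl-delay.

Here’s what each directive means:

- Allow: Allows user agents (bots) to crawl the URL.

- Disallow: Tells user agents (bots) not to crawl the URL.

- Clean-param: Tells search bots to ignore the specified URL parameters (such as tracking parameters), so URLs that differ only in those parameters are treated as one page. Yandex supports this directive; Google ignores it.

- Crawl-delay: Asks search bots to wait between requests to your site, usually expressed in seconds. Google ignores this directive, although some other crawlers, such as Bingbot, honor it.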
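Sometimes an Allow rule and a Disallow rule both match a URL. In that case, Google and the Robots Exclusion Protocol standard (RFC 9309) use the most specific rule, meaning the one with the longest path, and Allow wins a tie. That’s why WordPress’s default `Allow: /wp-admin/admin-ajax.php` line can override the broader `Disallow: /wp-admin/`. The Python sketch below models that precedence, including the `*` and `$` wildcards Google supports. `is_allowed` and `pattern_to_regex` are hypothetical helpers, and the sketch skips details such as percent-encoding normalization.

```python
from __future__ import annotations

import re


def pattern_to_regex(pattern: str) -> re.Pattern[str]:
    """Translate a robots.txt path pattern into a compiled regex.

    '*' matches any run of characters and a trailing '$' anchors the match to
    the end of the URL; otherwise the pattern is treated as a prefix.
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(piece) for piece in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_allowed(rules: list[tuple[str, str]], path: str) -> bool:
    """Decide whether `path` may be crawled under longest-match precedence.

    The matching Allow/Disallow rule with the longest pattern wins; if an
    Allow and a Disallow rule tie, Allow wins. No match means allowed.
    """
    best_length = -1
    allowed = True
    for directive, pattern in rules:
        if directive not in ("allow", "disallow") or not pattern:
            continue  # skip other directives (e.g. crawl-delay) and empty values
        if pattern_to_regex(pattern).match(path):
            length = len(pattern)
            if length > best_length or (length == best_length and directive == "allow"):
                best_length = length
                allowed = directive == "allow"
    return allowed


WP_RULES = [("disallow", "/wp-admin/"), ("allow", "/wp-admin/admin-ajax.php")]

if __name__ == "__main__":
    print(is_allowed(WP_RULES, "/wp-admin/options.php"))  # False
    print(is_allowed(WP_RULES, "/wp-admin/admin-ajax.php"))  # True
    print(is_allowed(WP_RULES, "/hello-world/"))  # True
```

Python’s built-in `urllib.robotparser` works differently. It applies the first matching rule in file order, so it can disagree with Google on files like WordPress’s default.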

In this section, you can also determine which user agents should follow the directive. You’ll also have to stipulate the value (URL, URL parameters, or frequency) of the directive.
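Rules are grouped by user agent. A crawler follows only the group that names it, falling back to the `User-agent: *` group when none does, so a bot with its own group ignores the rules you put under `*`. Here’s a simplified Python sketch of that selection. `rules_for` is a hypothetical helper, and real crawlers match on their product token (for example, `Googlebot`).

```python
from __future__ import annotations


def rules_for(groups: list[tuple[list[str], list[str]]], bot: str) -> list[str]:
    """Return the rule lines a crawler named `bot` should follow.

    Groups that name the bot (case-insensitively) take priority and are
    merged; the '*' groups apply only when no group names the bot.
    """
    token = bot.lower()
    named = [rules for agents, rules in groups
             if any(agent.lower() == token for agent in agents)]
    chosen = named or [rules for agents, rules in groups if "*" in agents]
    return [rule for rules in chosen for rule in rules]


GROUPS = [
    (["*"], ["Disallow: /wp-admin/"]),
    (["Googlebot"], ["Disallow: /private/"]),
]

if __name__ == "__main__":
    print(rules_for(GROUPS, "Googlebot"))  # ['Disallow: /private/']
    print(rules_for(GROUPS, "Bingbot"))  # ['Disallow: /wp-admin/']
```

If you want a specific bot to follow your general rules as well, repeat them inside that bot’s group.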

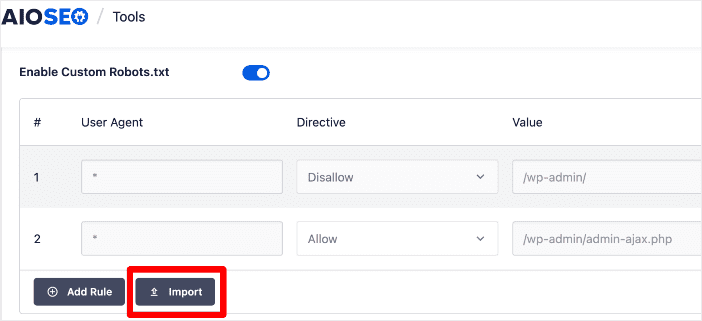

Step 4: Import Robots.txt Directives from Another Site (Optional)

A simpler way to generate robots.txt files in WordPress is to use the Import function.

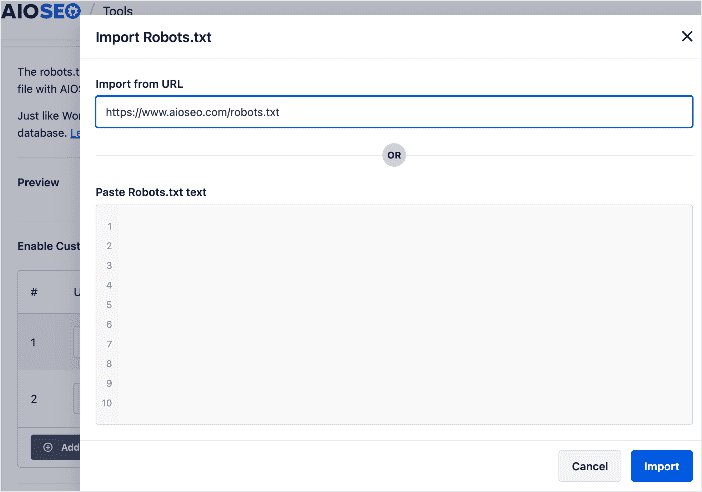

Clicking the Import button will open a window with 2 options for importing your chosen robots.txt file:

Import from URL: Simply paste the URL of the robots.txt file you want to import (https://examplesite.com/robots.txt) and click Import. The robots.txt file will be imported to your site.

Paste Robots.txt text: Open the URL with the robots.txt file you want to import and copy the text. Next, go back to your site, paste it into the provided field, and click Import.

This method is best if the site you’re importing the robots.txt file from has directives you would like to implement on your site.

Step 5: Block Unwanted Bots (Optional)

Another super cool feature in AIOSEO's Robots.txt Editor is the Block AI Crawlers section. It lets you add rules that ask unauthorized bots not to crawl your site. Such bots can cause performance, security, and SEO issues.

For more on this, check out our tutorial on blocking unwanted bot traffic on your site.

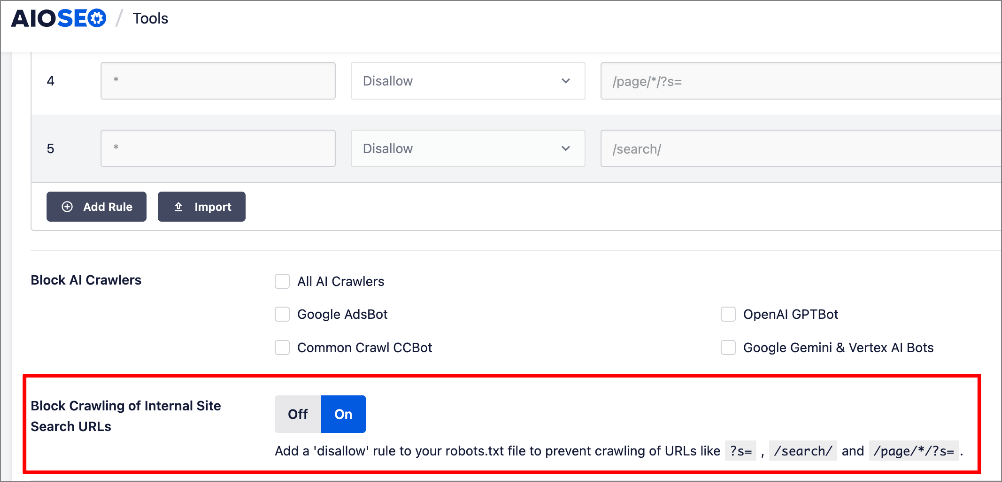
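Under the hood, blocking a crawler comes down to a `User-agent` group with `Disallow: /`. The Python sketch below generates those groups. The three tokens (`GPTBot`, `CCBot`, and `Google-Extended`) are examples published by OpenAI, Common Crawl, and Google. They are not necessarily the list AIOSEO uses, so check each operator’s documentation for current names.

```python
from __future__ import annotations

# Example AI-crawler user-agent tokens (illustrative; not AIOSEO's list).
AI_CRAWLERS = ["GPTBot", "CCBot", "Google-Extended"]


def block_rules(agents: list[str]) -> str:
    """Build one robots.txt group per agent that disallows the whole site."""
    groups = [f"User-agent: {agent}\nDisallow: /" for agent in agents]
    return "\n\n".join(groups) + "\n"


if __name__ == "__main__":
    print(block_rules(AI_CRAWLERS), end="")
```

These rules are requests, not enforcement. Reputable crawlers honor them, but a misbehaving bot can ignore robots.txt entirely.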

Step 6: Prevent Crawling of Internal Site Search URLs (Optional)

Websites often produce excess URLs when visitors use internal search features to find content or products. These dynamically generated URLs typically include parameters that search engines treat as unique pages. As a result, search engines may waste time crawling these unnecessary URLs, which can deplete your crawl budget and potentially hinder the indexing of your most important pages.

You can easily prevent this in AIOSEO's Robots.txt Editor.

AIOSEO adds the necessary “disallow” rules to your robots.txt file, so you don’t have to write one yourself for every search-generated parameter.

For more information, check out our tutorial on editing your robots.txt file.

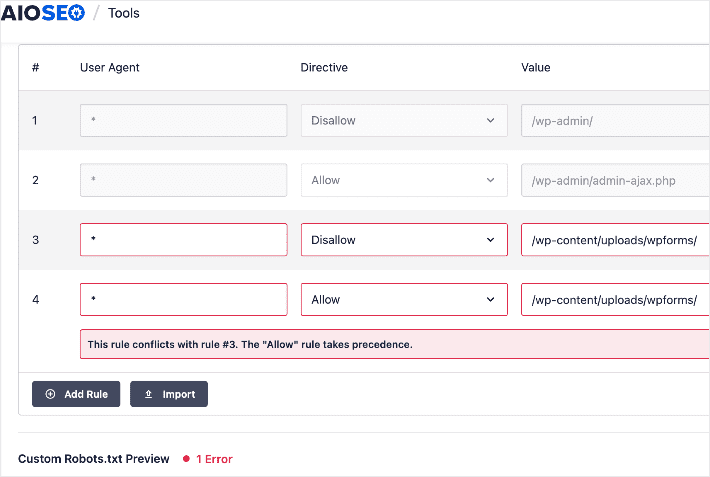
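WordPress search results typically live at URLs like `https://yoursite.com/?s=shoes`, and with pretty permalinks they can also appear as `/search/shoes/`. A wildcard rule can cover pagination and other variations in a single line. The Python sketch below checks sample URLs against two hypothetical Disallow patterns, `/*?s=` and `/search/`. These are illustrations, not necessarily the exact rules AIOSEO adds.

```python
from __future__ import annotations

import re
from urllib.parse import urlsplit

# Hypothetical Disallow patterns for WordPress internal search URLs.
SEARCH_DISALLOWS = ("/*?s=", "/search/")


def is_search_blocked(url: str, patterns: tuple[str, ...] = SEARCH_DISALLOWS) -> bool:
    """Return True if the URL's path and query match any Disallow pattern.

    '*' matches any run of characters, and matching is anchored at the start
    of the path, as it is for robots.txt rules.
    """
    parts = urlsplit(url)
    target = parts.path + (f"?{parts.query}" if parts.query else "")
    for pattern in patterns:
        regex = ".*".join(re.escape(piece) for piece in pattern.split("*"))
        if re.match(regex, target):
            return True
    return False


if __name__ == "__main__":
    for url in (
        "https://yoursite.com/?s=shoes",
        "https://yoursite.com/page/2/?s=shoes",
        "https://yoursite.com/search/shoes/",
        "https://yoursite.com/shoes-guide/",
    ):
        print(url, is_search_blocked(url))
```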

Step 7: Test Your Robots.txt File

Once you’re done generating your robots.txt file, you can test it for errors. Errors are rare if you use AIOSEO’s robots.txt editor, as it has built-in rule validation and error handling.

That said, if you want to double-check your robots.txt file, you can use the robots.txt report in Google Search Console, which replaced the older robots.txt Tester. Select your property, and the report shows the robots.txt files Google has fetched for your site, along with any errors or warnings.
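If you’d like a quick sanity check of your own before turning to Search Console, here’s a minimal Python sketch that flags a few common mistakes:

- missing colons
- misspelled directives
- rules placed before any `User-agent` line
- paths that don’t start with `/` or `*`
- relative Sitemap URLs

`lint_robots` is a hypothetical helper and its checks are deliberately simplified. It doesn’t replace Google’s own report.

```python
from __future__ import annotations

# Directives this sketch recognizes; anything else is reported as unknown.
KNOWN_DIRECTIVES = {"user-agent", "allow", "disallow", "sitemap", "crawl-delay", "clean-param"}


def lint_robots(text: str) -> list[str]:
    """Return a list of problems found in robots.txt text (simplified checks)."""
    problems: list[str] = []
    seen_agent = False
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()
        if not line:
            continue  # blank line or comment
        if ":" not in line:
            problems.append(f"line {number}: missing ':' separator")
            continue
        name, value = (part.strip() for part in line.split(":", 1))
        name = name.lower()
        if name not in KNOWN_DIRECTIVES:
            problems.append(f"line {number}: unknown directive '{name}'")
        elif name == "user-agent":
            seen_agent = True
        elif name in ("allow", "disallow"):
            if not seen_agent:
                problems.append(f"line {number}: rule appears before any User-agent line")
            elif value and not value.startswith(("/", "*")):
                problems.append(f"line {number}: path should start with '/' or '*'")
        elif name == "sitemap" and not value.startswith(("http://", "https://")):
            problems.append(f"line {number}: Sitemap should be a full URL")
    return problems


if __name__ == "__main__":
    broken = "Disallow: /tmp/\nUser-agent: *\nDisalow: /wp-admin/\nAllow wp-content\nSitemap: /sitemap.xml\n"
    for problem in lint_robots(broken):
        print(problem)
```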

How to Generate a Robots.txt File In WordPress: Your FAQs Answered

What is the purpose of a robots.txt file?

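A robots.txt file tells search engine crawlers which parts of your website they are allowed to crawl. It helps control how search engines interact with your site's content. Keep in mind that it controls crawling, not indexing: a blocked page can still appear in search results if other sites link to it. To keep a page out of search results, use a noindex robots meta tag instead, and leave that page crawlable so search engines can see the tag.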

How can I generate robots.txt in WordPress?

You can easily generate a robots.txt file in WordPress using a plugin like All In One SEO (AIOSEO). You don’t even need coding or technical knowledge.

Can I manually create a robots.txt file for my WordPress site?

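Yes, you can manually create a robots.txt file using a text editor and upload it to your site's root directory, for example via FTP or your host's file manager. This takes longer than using a plugin like AIOSEO and requires more technical knowledge. Also note that once a physical robots.txt file exists, your server delivers it instead of the virtual file WordPress generates.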

We hope this post helped you understand what a robots.txt file is in WordPress and why it’s important to optimize yours. You may also want to check out other articles on our blog, like our guide on crawling and indexing and its impact on SEO or our tips on improving your indexing on Google.

If you found this article helpful, then please subscribe to our YouTube Channel. You’ll find many more helpful tutorials there. You can also follow us on Twitter, LinkedIn, or Facebook to stay in the loop.

Disclosure: Our content is reader-supported. This means if you click on some of our links, then we may earn a commission. We only recommend products that we believe will add value to our readers.