What is crawling and indexing in SEO?

If you’re asking that question, you’re not the only one.

Many SEO terms are loosely thrown around in articles and discussions without being properly explained.

Crawling and indexing are excellent examples of this. Knowing what these terms mean will help you better understand SEO and how to optimize your site and content for improved search rankings.

So, what is crawling in SEO?

In this article, we’ll answer that question, as well as look at what indexing is.


What is Crawling in SEO?

Crawling is the process search engine bots (also known as search spiders or crawlers, such as Googlebot) use to systematically browse the internet to discover and access web pages. These bots start from a list of known web addresses (URLs) and then follow links from one page to another, gradually mapping the vast, interconnected network of web pages.

As the search bots crawl your site, they access your posts and pages, read the content, and follow the internal and external links on those pages. They continue this process recursively, navigating from one link to another until they have crawled a substantial portion of your site.

For small websites, bots can usually crawl every URL. For large sites, however, search bots may not reach every page, because they only crawl as many URLs as the site’s crawl budget allows.
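To make the link-following loop concrete, here is a minimal Python sketch of a breadth-first crawler. It runs over a hypothetical in-memory site (`SITE`) instead of the live web, follows only internal links, and models crawl budget as a simple cap on the number of pages fetched. Real search engine crawlers are far more sophisticated and set crawl budgets on their own terms.

```python
from collections import deque

# Hypothetical site: each URL maps to the links found on that page.
SITE = {
    "/": ["/blog/", "/about/"],
    "/blog/": ["/blog/post-1/", "/blog/post-2/", "/"],
    "/about/": ["/contact/"],
    "/blog/post-1/": ["/blog/post-2/"],
    "/blog/post-2/": ["/blog/post-1/", "https://other-site.example/"],
    "/contact/": [],
}


def crawl(seed_urls, links_on, crawl_budget):
    """Breadth-first crawl that stops after `crawl_budget` pages are fetched."""
    queue = deque(seed_urls)
    seen = set(seed_urls)
    crawled = []
    while queue and len(crawled) < crawl_budget:
        url = queue.popleft()
        crawled.append(url)  # stand-in for fetching and reading the page
        for link in links_on.get(url, []):
            # Follow internal links only, and queue each URL once.
            if link.startswith("/") and link not in seen:
                seen.add(link)
                queue.append(link)
    return crawled


print(crawl(["/"], SITE, crawl_budget=10))
# ['/', '/blog/', '/about/', '/blog/post-1/', '/blog/post-2/', '/contact/']
print(crawl(["/"], SITE, crawl_budget=3))
# ['/', '/blog/', '/about/']
```

With a budget of 3, the blog posts and the contact page are never reached, which mirrors the large-site situation described above.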

Search engines use the data collected during crawling to understand the structure of websites, the content they contain, and how different web pages are related to each other. The information obtained from crawling is then used for the next step: indexing.

What is Indexing in SEO?

Once the crawlers have found and fetched your web pages, the next step in the process is indexing. Indexing involves analyzing and storing the information collected during the crawling process. The gathered data is organized and added to Google’s index (or that of another search engine), a massive database containing information about all the web pages the search engine has discovered.

Search engines use complex algorithms to evaluate and categorize the content found on each page during indexing. Factors like keywords, page structure, meta tags, and overall relevance are considered during this process.

Indexing is important because it enables search engines to quickly retrieve relevant results when users perform a search query. These indexed results are then displayed on search engine results pages (SERPs).
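A toy inverted index shows why indexing makes retrieval fast: instead of re-reading every page at query time, the engine looks up each search term in a prebuilt map from terms to URLs. The pages and URLs below are hypothetical, and real search indexes store far more information and rank their results, so treat this only as an illustration of the idea.

```python
import re
from collections import defaultdict

# Hypothetical crawled pages: URL -> extracted text.
PAGES = {
    "/robots-txt-guide/": "How to edit your robots.txt file",
    "/xml-sitemap-guide/": "How to create an XML sitemap",
    "/crawl-budget/": "What is crawl budget and how robots.txt affects it",
}


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(pages):
    """Map each term to the set of URLs whose text contains it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for term in tokenize(text):
            index[term].add(url)
    return index


def search(index, query):
    """Return the URLs that contain every query term (no ranking)."""
    terms = tokenize(query)
    if not terms:
        return set()
    results = set(index.get(terms[0], set()))
    for term in terms[1:]:
        results &= index.get(term, set())
    return results


index = build_index(PAGES)
print(sorted(search(index, "robots.txt")))
# ['/crawl-budget/', '/robots-txt-guide/']
```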

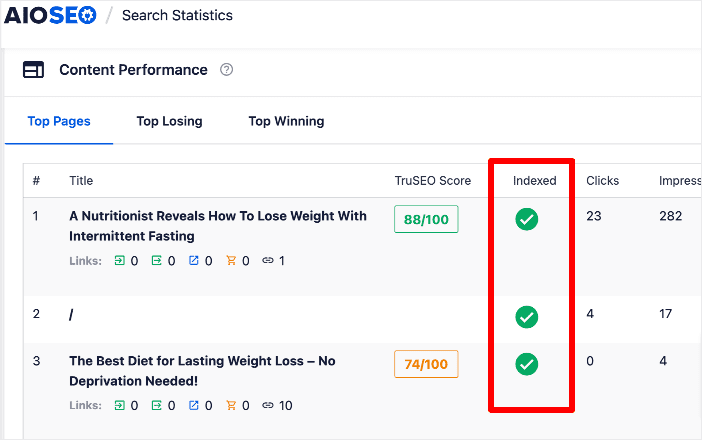

You can use AIOSEO to check the index status of your posts and pages.

This helps you keep tabs on which pages are indexed so you can troubleshoot those that aren’t.

It’s important to note that not all web pages get indexed, and search engines prioritize pages based on their perceived importance, authority, and relevance. Web pages that are inaccessible due to technical issues or are deemed low-quality may not be indexed.

Why are Crawling and Indexing Important for SEO?

Crawling and indexing are fundamental aspects of SEO. They play a crucial role in determining how well your website ranks and how visible it is in search engines like Google.

That’s why you must ensure your site can easily be crawled and indexed.

This is easy with a powerful WordPress SEO plugin like All In One SEO (AIOSEO).

AIOSEO is the best WordPress SEO plugin on the market, boasting over 3 million active installs. Millions of savvy website owners and marketers trust the plugin to help them dominate SERPs and drive relevant site traffic.

It also has many powerful SEO features and modules to help you optimize your website for crawlability and indexability.

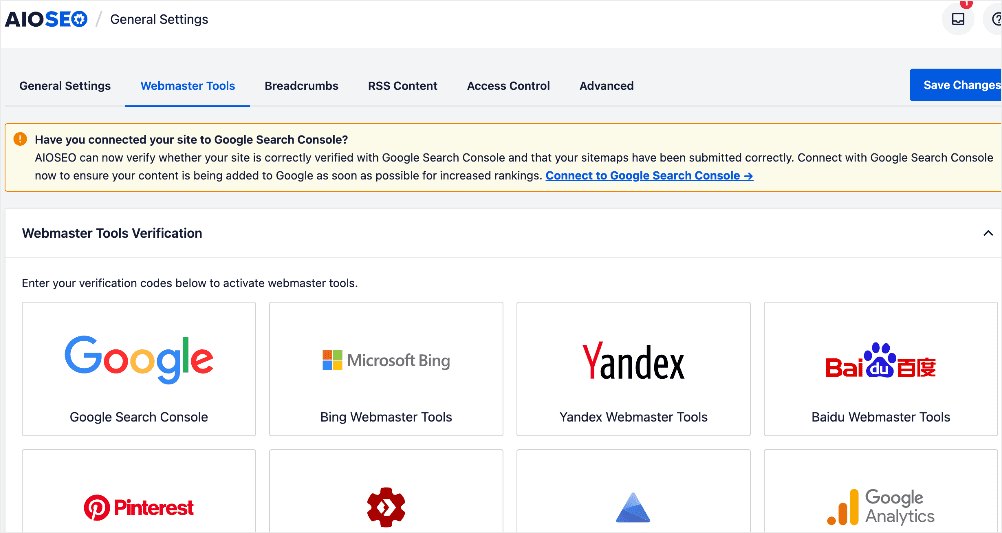

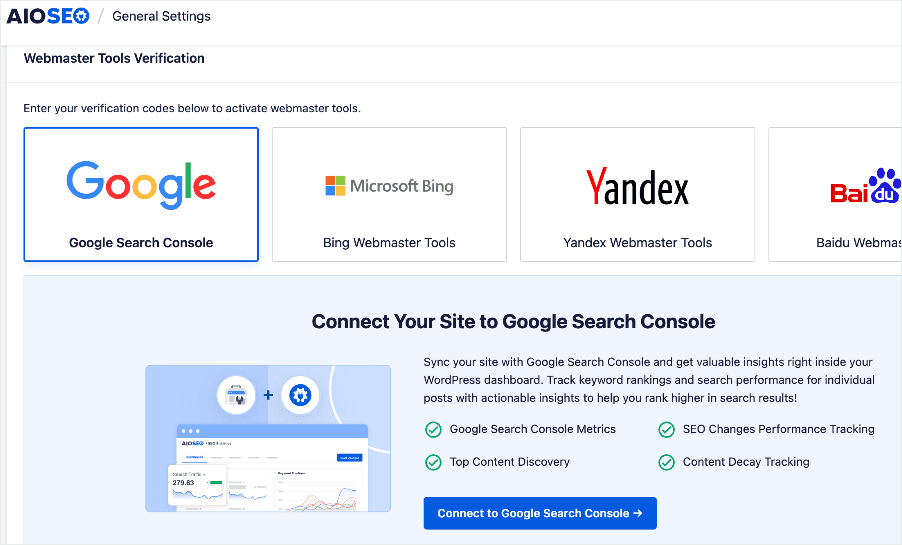

Regarding indexing, one of the most loved features is the Webmaster Tools section.

This function lets you connect your site to various platforms, including Google Analytics and Google Search Console (GSC). As for GSC, you don’t have to go through the tedious process of copying and pasting verification codes. Connecting your site to GSC helps you submit your sitemaps and content to the search engine for indexing.

Click on the Connect to Google Search Console button to start the connection wizard. The first step requires you to select the Google account you want to use to connect to GSC.

For detailed instructions on using this feature, check out our tutorial on connecting your site to Google Search Console.

For step-by-step instructions on how to install AIOSEO, check our detailed installation guide.

Why Should You Optimize Your Site for Crawling and Indexing?

Let’s delve deeper into why you should optimize your site for search bots to crawl and index your site.

Increased Discoverability

When search engines crawl and index your site, your web pages have a better chance of being discovered by users. If a page is not crawled and indexed, it won’t appear in search engine results, making it practically invisible to potential visitors and users. Proper crawling ensures that search engines can find and index your website’s content, allowing it to appear in relevant search queries.

Better Search Rankings

After a web page is indexed, it becomes eligible to appear on SERPs. However, just being indexed doesn’t guarantee a good ranking. Search engines use complex algorithms to determine the relevance and authority of web pages in relation to specific search queries.

The more accessible and understandable your content is to search engine crawlers, the higher the likelihood of ranking well for relevant searches. Additionally, proper indexing ensures that all your website’s pages are considered for ranking, increasing your chances of ranking higher on SERPs.

Freshness and Updates

Most websites frequently add new content, update existing pages, or remove outdated ones. Without proper crawling and indexing, search engines won’t be aware of the changes and updates you make on your site.

Timely crawling and indexing ensure that search engines stay up-to-date with your website’s latest content. This enables them to reflect the most current and relevant information in search results. Fresh and regularly updated content can also positively impact your SEO, as search engines often prioritize new and relevant information.

9 Tips to Improve Your Site’s Crawlability and Indexability

Now you know what crawling and indexing are in SEO. Next, let’s look at how you can help search engines better crawl and index your site and its content.

1. Start with Site Architecture

One of the first places to start when optimizing your site for crawling and indexing is your site architecture. This means organizing your posts and pages in a way that’s easy for search engines and readers to navigate. This includes:

- Optimize URL structure: Organize your content into a clear and logical hierarchy. Divide your content into categories and subcategories, making it easy for search engines to follow and understand what each URL is about.

- Clear navigation: Aim for a flat navigation structure, where each page can be reached within a few clicks from the homepage. This ensures that important pages are easily accessible to users and search engine crawlers.

- Descriptive URLs: Use descriptive and user-friendly URLs that include relevant keywords. Avoid long strings of numbers or symbols that don’t provide any context to users or search engines.
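As a rough illustration of descriptive, hierarchical URLs, this Python sketch turns a category path and a post title into a readable URL. The `slugify` and `build_url` helpers and their stop-word list are hypothetical; WordPress generates slugs from your permalink settings on its own.

```python
import re
import unicodedata

# Hypothetical stop words dropped to keep slugs short.
STOP_WORDS = frozenset({"a", "an", "the", "and", "or", "of", "to", "in"})


def slugify(title, stop_words=STOP_WORDS):
    """Turn a title into a short, descriptive, hyphen-separated slug."""
    # Strip accents, e.g. "Café" -> "Cafe".
    text = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    words = re.findall(r"[a-z0-9]+", text.lower())
    return "-".join(w for w in words if w not in stop_words)


def build_url(base, categories, title):
    """Place the post slug under its category path, e.g. /seo/technical-seo/<slug>/."""
    parts = [slugify(c) for c in categories] + [slugify(title)]
    return base.rstrip("/") + "/" + "/".join(parts) + "/"


print(build_url("https://www.example.com", ["SEO", "Technical SEO"],
                "What Is Crawling and Indexing in SEO?"))
# https://www.example.com/seo/technical-seo/what-is-crawling-indexing-seo/
```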

Check out this guide for more details on the best permalink structure in WordPress.

2. Optimize Your Robots.txt File

A robots.txt file controls the crawling behavior of search engine bots and other web crawlers on your website. It serves as a set of instructions telling search bots which pages or sections of your website they are allowed or disallowed to crawl. Note that robots.txt controls crawling, not indexing: a disallowed URL can still end up in the index if other pages link to it.

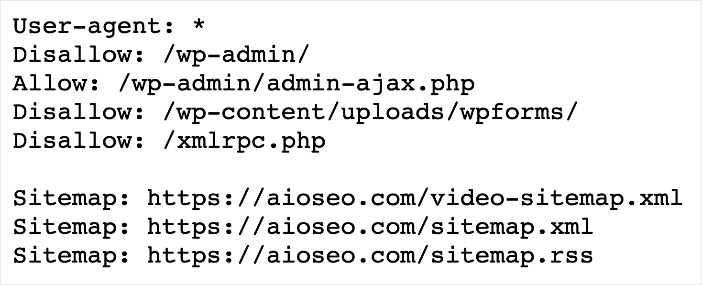

Here’s an example of a robots.txt file:

The most important benefit of optimizing your robots.txt file is that it improves crawl efficiency. By telling search bots which pages they shouldn’t crawl, it steers them away from low-value URLs. This way, they spend more time on your important pages, increasing the chances of those pages being properly indexed and served to users.
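You can see how a crawler interprets these rules with Python’s standard-library `urllib.robotparser`. The rules and URLs below are assumptions for illustration. Keep in mind that this parser applies the first rule that matches, while Google uses the most specific match, so the Allow line is placed first to get the same result from both. Googlebot also ignores Crawl-delay.

```python
from urllib.robotparser import RobotFileParser

# Example rules, assumed for illustration.
ROBOTS_TXT = """\
User-agent: *
Allow: /wp-admin/admin-ajax.php
Disallow: /wp-admin/
Crawl-delay: 10

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for url in (
    "https://www.example.com/blog/what-is-crawling/",  # no rule matches: crawl
    "https://www.example.com/wp-admin/options.php",    # Disallow matches: skip
    "https://www.example.com/wp-admin/admin-ajax.php", # Allow matches first: crawl
):
    print(url, "->", "crawl" if parser.can_fetch("Googlebot", url) else "skip")

print("Crawl-delay:", parser.crawl_delay("Googlebot"))  # Crawl-delay: 10
```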

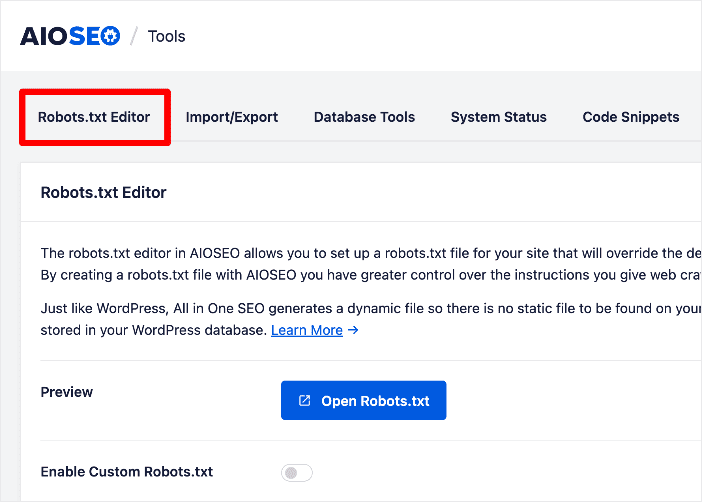

While this may sound highly technical, it’s super easy to do with AIOSEO’s robots.txt editor. You can access this in the tools section of the plugin.

All you need to do to customize your robots.txt file is click Enable Custom Robots.txt. You then get 4 directives you can apply on your site, namely:

- Allow

- Disallow

- Clean-param

- Crawl-delay

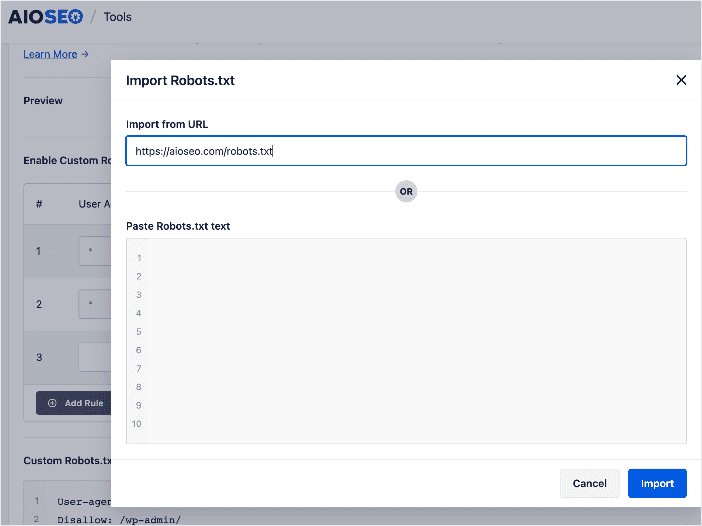

And if the site whose robots.txt file you’re editing is similar to another site, you can import the other site’s robots.txt file via its URL or by copying and pasting it.

A well-optimized robots.txt file helps search bots spend their crawl budget on your priority pages, increasing the chances of those pages being indexed and served to users for relevant keywords.

For tips on editing your robots.txt file, check out this article.

3. Don’t Forget Your XML Sitemaps

Another way to improve your site’s crawlability is by optimizing your XML sitemap.

An XML sitemap is a file that lists all the important pages and URLs on your website in an XML format. It serves as a roadmap for search engine crawlers to discover and index the content on your site more effectively.

Creating and optimizing an XML sitemap is easy with AIOSEO’s sitemap generator.

Some of the benefits of creating one include:

- Informs search engines about your content: An XML sitemap can carry information about each page of your site, such as when it was last modified and its importance relative to other pages on your site.

- Helps search engines discover your new pages: It tells search engines when you’ve added new pages to your site, such as a newly published blog post.

- Increased page and crawl priority: It lets you signal the relative priority of pages on your website. For example, you can add a priority tag to your sitemap saying which pages are the most important. Keep in mind this is only a hint; Google, for instance, ignores the priority value.

- List your website’s URLs: You can submit a list of all URLs for your website. This is beneficial because it makes it easier for search engines to discover your important URLs.
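To show what such a file contains, here is a minimal Python sketch that writes a sitemap in the sitemaps.org format with `xml.etree.ElementTree`. The URLs, dates, and priorities are hypothetical, and AIOSEO’s sitemap generator builds this file for you; the point is only to show the `loc`, `lastmod`, and `priority` fields described above.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return sitemap XML for a list of (url, lastmod, priority) tuples."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod, priority in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # special characters like & are escaped automatically
        ET.SubElement(url, "lastmod").text = lastmod  # W3C date format, e.g. 2024-01-15
        # 0.0 to 1.0; only a hint, and Google ignores it.
        ET.SubElement(url, "priority").text = f"{priority:.1f}"
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


print(build_sitemap([
    ("https://www.example.com/", "2024-01-15", 1.0),
    ("https://www.example.com/blog/what-is-crawling/", "2024-01-10", 0.8),
]))
```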

Once you’ve created your sitemap, submit it to Google through Google Search Console (GSC). You can also submit it to Bing and other search engines.

Check out this tutorial for step-by-step instructions on creating an XML sitemap.

4. Implement Schema Markup

Schema markup is a semantic vocabulary that helps search engines better understand your content. It involves adding tags (structured data) that give search engines more context about your pages or posts.

Adding schema markup to your pages has many advantages, including:

- Improves indexing

- Boosts rankings

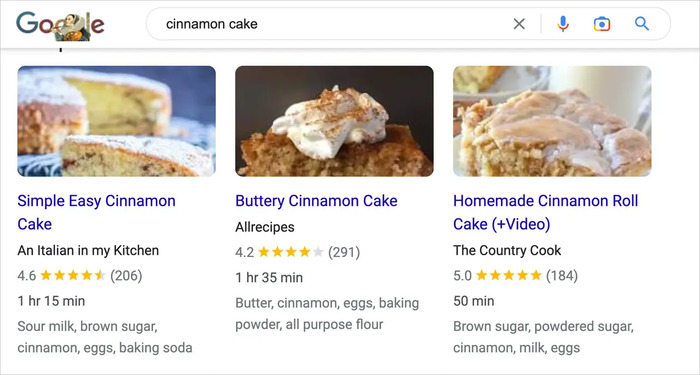

- Can result in rich snippets

- Improves organic clickthrough rates (CTR)

Pages with schema markup can enjoy a boost in visibility thanks to more interactive search listings.
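Structured data is commonly embedded in a page as JSON-LD. This Python sketch builds a minimal schema.org `Article` block; the headline, author name, date, and URL are hypothetical, and a real page would usually carry more properties than these.

```python
import json


def article_schema(headline, author, date_published, url):
    """Return a JSON-LD <script> tag describing a post with schema.org's Article type."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,  # ISO 8601 date
        "mainEntityOfPage": url,
    }
    return '<script type="application/ld+json">\n' + json.dumps(data, indent=2) + "\n</script>"


print(article_schema(
    "What Is Crawling and Indexing in SEO?",
    "Jane Doe",  # hypothetical author
    "2024-01-15",
    "https://www.example.com/what-is-crawling-indexing-seo/",
))
```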

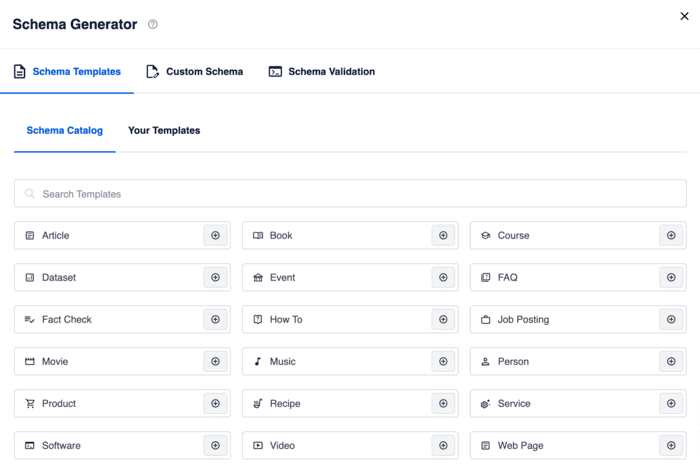

Implementing schema markup is easy with AIOSEO’s next-gen Schema Generator.

You don’t even need any coding or technical skills to add schema to your website. All it takes is a few clicks, and you’re done. You can get detailed instructions for doing so in this tutorial.

5. Utilize Breadcrumbs

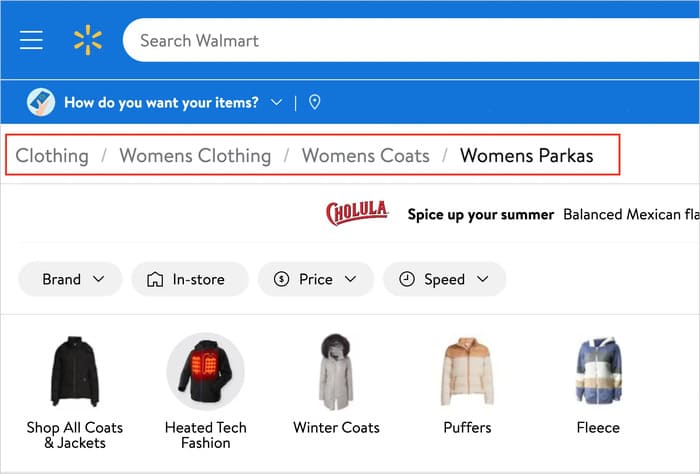

Breadcrumbs are tiny navigational links that provide users with a clear and hierarchical navigation trail. Search engine bots can also follow your breadcrumbs to navigate through your site more efficiently.

Breadcrumbs offer a structured navigation system by displaying the hierarchical path from the homepage to the current page. This structure helps search engine bots understand the organization of your website’s content and its relationships. As a result, it’s easier for them to crawl and index your pages effectively.
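The hierarchical path a breadcrumb trail shows can also be described in structured data with schema.org’s `BreadcrumbList` type. This Python sketch assumes a hypothetical site whose URL path mirrors its category hierarchy (with trailing slashes) and a hand-written `names` mapping for the labels.

```python
import json
from urllib.parse import urlsplit


def breadcrumb_list(url, names):
    """Build schema.org BreadcrumbList data with one ListItem per level of the URL path.

    `names` maps each path segment to a human-readable label (hypothetical input).
    """
    parts = urlsplit(url)
    base = f"{parts.scheme}://{parts.netloc}"
    segments = [s for s in parts.path.split("/") if s]
    items = [{"@type": "ListItem", "position": 1, "name": "Home", "item": base + "/"}]
    path = ""
    for position, segment in enumerate(segments, start=2):
        path += "/" + segment
        items.append({
            "@type": "ListItem",
            "position": position,
            "name": names.get(segment, segment),
            "item": base + path + "/",
        })
    return {"@context": "https://schema.org", "@type": "BreadcrumbList", "itemListElement": items}


trail = breadcrumb_list(
    "https://www.example.com/seo/technical-seo/crawling/",
    {"seo": "SEO", "technical-seo": "Technical SEO", "crawling": "Crawling"},
)
print(" > ".join(item["name"] for item in trail["itemListElement"]))
# Home > SEO > Technical SEO > Crawling
print(json.dumps(trail, indent=2))
```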

To learn more about breadcrumbs and adding them to your site, check out this tutorial.

6. Leverage IndexNow

The IndexNow protocol makes the internet more efficient by instantly alerting participating search engines of any changes you make on your website. For example, if you change your content or add a new post or page, the IndexNow protocol will ping search engines about those changes.

Doing so will result in the search engines crawling and indexing your changes faster.

This means IndexNow is a powerful tool to help you get your content indexed faster, resulting in better chances of ranking.


Another significant advantage of IndexNow is that when you ping one search engine participating in the IndexNow initiative, the other participating search engines are notified too. As a result, your new content can appear in search results for relevant searches sooner.
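Under the hood, an IndexNow ping is a small HTTP request. This Python sketch builds (but does not send) a batch submission to the shared `api.indexnow.org` endpoint with a JSON body of `host`, `key`, `keyLocation`, and `urlList`, following the protocol documentation at indexnow.org. The host, key, and URL are hypothetical, and AIOSEO sends these notifications for you once IndexNow is set up.

```python
import json
import urllib.request

INDEXNOW_ENDPOINT = "https://api.indexnow.org/IndexNow"


def build_indexnow_request(host, key, urls):
    """Build (but don't send) an IndexNow POST request for a batch of changed URLs."""
    payload = {
        "host": host,
        "key": key,
        # The key must also be served as a text file on your site to prove ownership.
        "keyLocation": f"https://{host}/{key}.txt",
        "urlList": list(urls),
    }
    return urllib.request.Request(
        INDEXNOW_ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json; charset=utf-8"},
        method="POST",
    )


request = build_indexnow_request(
    "www.example.com",
    "0123456789abcdef0123456789abcdef",  # hypothetical key
    ["https://www.example.com/what-is-crawling-indexing-seo/"],
)
# Sending it would notify participating search engines:
# urllib.request.urlopen(request)
```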

The best part is that adding IndexNow to your site is easy. Just follow these simple steps.

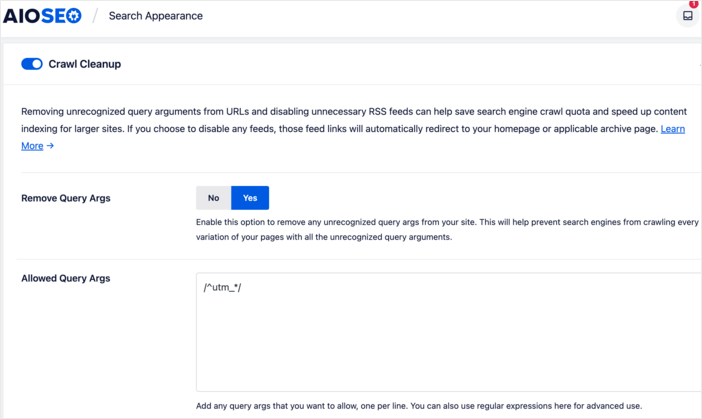

7. Use Crawl Cleanup to Eliminate Unnecessary Query Args

As your website grows and evolves, it inevitably builds up URLs that don’t contribute to your SEO. Worse, some of them may harm your SEO by wasting crawl budget. URLs with query args are a common example. Query args, or URL parameters, are the key-value pairs that follow the question mark (?) in a web address, separated by ampersands (&).

Consider the example below:

https://www.example.com/search?q=robots&category=tech&page=1

In this case, the query args are q=robots&category=tech&page=1.

The problem with query args is that they can create multiple URLs for the same page. Search engines may then crawl the same page many times, because the different query args mislead them into treating each URL as a separate page.
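Here is a minimal Python sketch of the idea: strip any query args that don’t change the content and sort the rest, so duplicate variants collapse into one URL. The `KEEP_PARAMS` allowlist and the sorting step are assumptions for illustration, not how AIOSEO’s Crawl Cleanup is implemented.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical allowlist: parameters that actually change what the page shows.
KEEP_PARAMS = {"q", "category", "page"}


def canonicalize(url, keep=KEEP_PARAMS):
    """Drop query args not in `keep`, sort the rest, and drop any #fragment."""
    parts = urlsplit(url)
    query = sorted((k, v) for k, v in parse_qsl(parts.query) if k in keep)
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(query), ""))


urls = [
    "https://www.example.com/search?q=robots&category=tech&page=1",
    "https://www.example.com/search?page=1&category=tech&q=robots&utm_source=newsletter",
    "https://www.example.com/search?q=robots&category=tech&page=1&sessionid=abc123",
]
print({canonicalize(u) for u in urls})
# {'https://www.example.com/search?category=tech&page=1&q=robots'}
```

Three URLs that a crawler would otherwise fetch separately now map to a single address.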

To avoid this wastage in crawl budget, you can use AIOSEO’s Crawl Cleanup feature to strip query args from your URLs.

Don’t worry. This powerful tool allows you to define which URLs to clean up.

Check out this tutorial for more details on how Crawl Cleanup works.

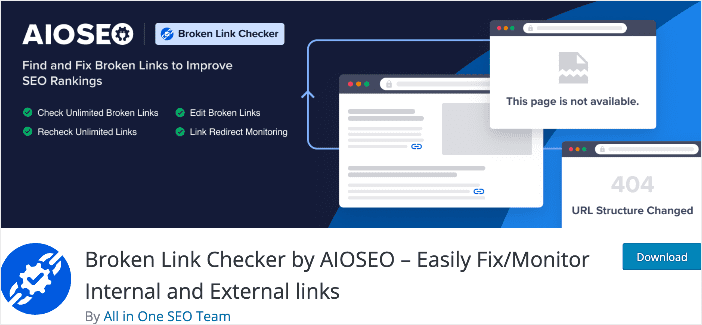

8. Find and Fix Broken Links

Broken links are bad for SEO because they cause crawl errors. They disrupt the normal flow of the crawling process: instead of efficiently following links from one page to another, the crawler hits dead ends. This can waste crawl resources and leave other pages unexplored.
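A broken link check boils down to requesting every linked URL and flagging 4xx or 5xx responses. In this Python sketch, `http_status` sends a HEAD request with the standard library, while the usage example swaps in a hypothetical `FAKE_STATUSES` lookup so it runs offline. It is an illustration only, not how AIOSEO’s Broken Link Checker works.

```python
import urllib.error
import urllib.request


def http_status(url, timeout=10):
    """Return the HTTP status for `url` via a HEAD request (needs network access).

    Some servers reject HEAD requests, so a real checker may need a GET fallback.
    """
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code  # urllib raises 4xx/5xx responses as HTTPError


def find_broken_links(links_by_page, status_of=http_status):
    """Return (page, link, status) for every link that answers with a 4xx/5xx status."""
    broken = []
    checked = {}  # cache so each link is only requested once
    for page, links in links_by_page.items():
        for link in links:
            if link not in checked:
                checked[link] = status_of(link)
            if checked[link] >= 400:
                broken.append((page, link, checked[link]))
    return broken


# Offline usage with made-up statuses instead of real HTTP requests.
FAKE_STATUSES = {"https://www.example.com/blog/": 200, "https://www.example.com/old-post/": 404}
site_links = {
    "https://www.example.com/": ["https://www.example.com/blog/", "https://www.example.com/old-post/"],
    "https://www.example.com/blog/": ["https://www.example.com/old-post/"],
}
print(find_broken_links(site_links, status_of=FAKE_STATUSES.get))
```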

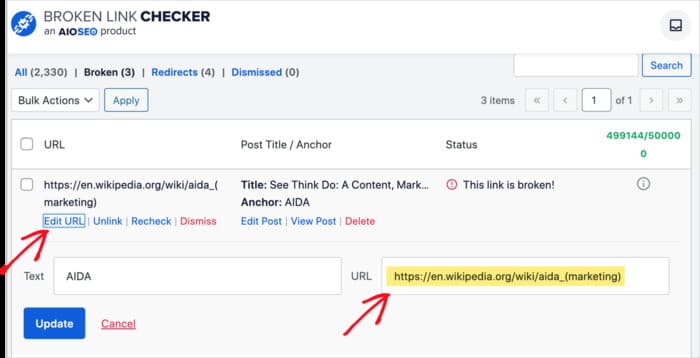

That’s why you should regularly monitor your site for broken links. This can be easily done with AIOSEO’s Broken Link Checker, a tool that crawls your site to find broken links.

This powerful tool does more than find broken links. It also offers you solutions for fixing them.

Because Broken Link Checker automatically checks your site for broken links, you reduce the occurrence of crawl errors.

For detailed instructions on using this powerful tool to find and fix broken links, check out this tutorial.

9. Be Strategic with Internal Linking

Another way to optimize your site for crawlability and therefore indexation is to be strategic about your internal link building. One way to do this is by creating a logical internal linking structure that connects your pages. This helps search engines discover new content and understand the hierarchy and importance of your pages.
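Two quick checks on your internal link structure are click depth (how many clicks each page is from the homepage) and orphan pages (pages no other page links to, which crawlers following links won’t reach). This Python sketch computes both over a hypothetical link graph; it is not how Link Assistant analyzes your site.

```python
from collections import deque

# Hypothetical internal-link graph: page -> pages it links to.
LINKS = {
    "/": ["/blog/", "/about/"],
    "/blog/": ["/blog/crawling/"],
    "/blog/crawling/": ["/blog/indexing/"],
    "/blog/indexing/": [],
    "/about/": [],
    "/blog/old-guide/": ["/blog/crawling/"],  # nothing links to this page
}


def click_depths(links, home="/"):
    """Breadth-first search from the homepage: clicks needed to reach each page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def orphan_pages(links, home="/"):
    """Pages (other than the homepage) that no other page links to."""
    linked_to = {t for source, targets in links.items() for t in targets if t != source}
    return sorted(p for p in links if p != home and p not in linked_to)


print(click_depths(LINKS))
# {'/': 0, '/blog/': 1, '/about/': 1, '/blog/crawling/': 2, '/blog/indexing/': 3}
print(orphan_pages(LINKS))
# ['/blog/old-guide/']
```

Pages with a large click depth, or orphans like `/blog/old-guide/`, are good candidates for new internal links.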

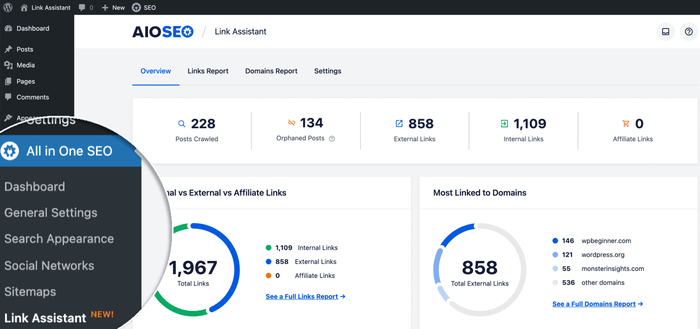

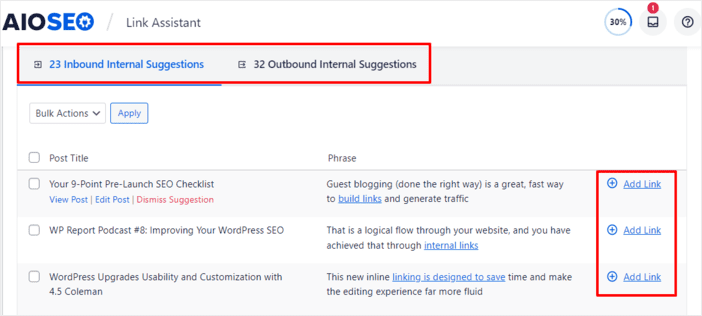

A great tool that can help you automate this is Link Assistant.

This is an AI-powered tool that crawls your site and finds related content that you can link together. The tool is so powerful it also offers anchor text suggestions.

Strategic internal linking also helps search engines understand the primary theme of your site. This makes it easier for them to properly index your pages and serve them for relevant search queries.

What is Crawling and Indexing in SEO?: Your FAQs Answered

What is the difference between crawling and indexing?

Crawling is the discovery process search engines use to find content on your site. Indexing is the process of logically storing that content in the search engine’s database.

What is the best tool for improving the crawling and indexation of my site?

All In One SEO (AIOSEO) is the best tool to help you optimize your site and content for crawling and indexing. It has many features designed to help make it easy for search engines to crawl, discover, and index your URLs.

What is the benefit of crawling in SEO?

Crawling makes it easier for search engines to discover your content and serve it to users for relevant search queries.

What is Crawling in SEO? Now That You Know, Optimize for It

Now that you know what crawling and indexing are and why they’re important, go ahead and optimize your site for search engines. Remember, with AIOSEO, you get a lot of features and tools to help you do that.

We hope this post helped you understand what crawling and indexing are and why they’re important for your SEO. You may also want to check out other articles on our blog, like our tutorial on using the primary category to customize breadcrumbs or our guide on how long SEO takes.

If you found this article helpful, then please subscribe to our YouTube Channel. You’ll find many more helpful tutorials there. You can also follow us on Twitter, LinkedIn, or Facebook to stay in the loop.

Disclosure: Our content is reader-supported. This means if you click on some of our links, then we may earn a commission. We only recommend products that we believe will add value to our readers.