What is robots.txt in WordPress?

Search for tips on improving your search engine rankings, and chances are the robots.txt file will come up. That’s because it’s an important part of your site and one worth getting right if you want to rank higher on search engine results pages (SERPs).

In this article, we’ll answer the question, “What is robots.txt in WordPress?” We’ll also show you how to generate a robots.txt file for your site.

What is Robots.txt?

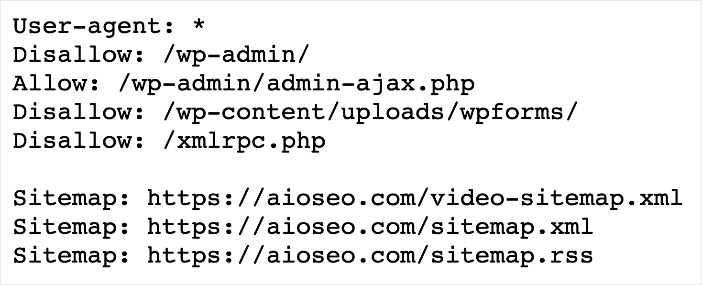

Robots.txt is a text file that website owners can create to tell search engine bots how to interact with their sites, mainly which pages to crawl and which to ignore. It’s a plain text file stored in your website's root directory (also known as the main folder). In our case, our robots.txt’s URL is:

https://aioseo.com/robots.txt

And this is what it looks like:

A robots.txt file should not be confused with robots meta tags, which are HTML tags embedded in the head of a webpage. A robots meta tag only applies to the page it’s on, while robots.txt applies to the whole site.
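To make the format concrete, here is a minimal sketch in Python. The file content, the `example.com` URLs, and the helper name `is_allowed` are made up for illustration and are not a copy of any real site's file. The script uses the standard library's `urllib.robotparser` to read the rules and check whether a given crawler may fetch a URL.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, used for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the rules let `user_agent` crawl `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for url in (
        "https://example.com/blog/hello-world/",
        "https://example.com/wp-admin/options.php",
    ):
        print(url, is_allowed(ROBOTS_TXT, "Googlebot", url))
```

Each group in the file starts with a `User-agent` line, followed by the rules that apply to that crawler. Here, `*` means the rules apply to every bot.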

What does this all mean?

To better understand this, let’s back up a bit and define what a robot is. In this context, a robot is any bot or web crawler that visits websites on the Internet. In the robots.txt file, these are called user agents. The most common ones are search engine crawlers, also called search bots or search engine spiders, among many other names. These bots crawl the web to help search engines discover and index your content so they can serve it to users in response to relevant search terms.

In general, search bots are good for the Internet and your site. However, as useful as they are, there are instances where you may want to control how they interact with your site.

This is where the robots.txt file comes in.

What Does a Robots.txt File Do?

As already seen, a robots.txt file serves as a set of instructions to guide search engine bots and other web crawlers on how to interact with your website's content. It acts as a digital gatekeeper that influences how search engines perceive and interact with your website.

Here are some things you can use a robots.txt file for:

Control Crawling

Search engine bots crawl through websites to discover and index content. A robots.txt file informs these bots which parts of your website are open for crawling and which are off-limits. You can control which pages they can access by specifying rules for different user agents (bots).

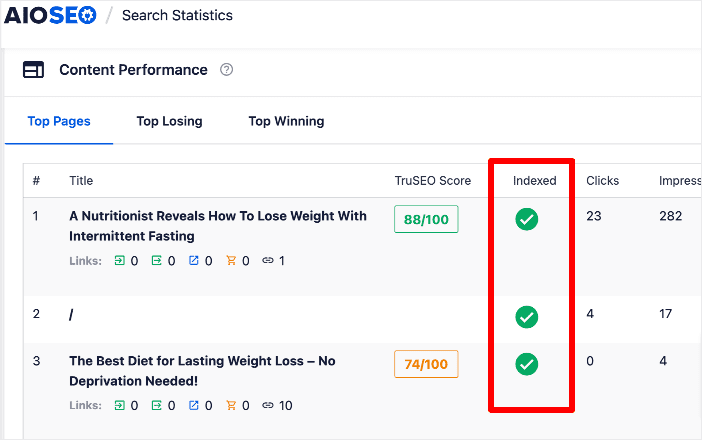
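As a rough sketch of per-bot rules, the Python snippet below parses a made-up robots.txt that blocks one crawler entirely and keeps everyone else out of a single folder. `ExampleBot`, the `example.com` URLs, and the helper `crawl_report` are assumptions for illustration; the parsing uses the standard library's `urllib.robotparser`.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: "ExampleBot" is a made-up crawler name.
ROBOTS_TXT = """\
User-agent: ExampleBot
Disallow: /

User-agent: *
Disallow: /wp-admin/
"""


def crawl_report(robots_txt, user_agents, urls):
    """Map each user agent to the URLs it is allowed to crawl."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {
        agent: [url for url in urls if parser.can_fetch(agent, url)]
        for agent in user_agents
    }


if __name__ == "__main__":
    urls = ["https://example.com/blog/", "https://example.com/wp-admin/"]
    print(crawl_report(ROBOTS_TXT, ["ExampleBot", "Bingbot"], urls))
```

Note that Python's parser applies the first rule that matches and doesn't interpret wildcards inside paths, so its answers can differ from a search engine's own parser on more complex files.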

Direct Indexing

While crawling involves discovering content, indexing involves adding discovered content to the search engine's database. A robots.txt file influences indexing indirectly: pages that bots can't crawl are far less likely to be indexed, so you can steer search engines toward your important pages and keep irrelevant content from cluttering search results. Keep in mind that a blocked URL can still be indexed if other sites link to it, so use a noindex robots meta tag on pages that must stay out of search results.

You can use AIOSEO to check the index status of your posts and pages, which makes it easy to keep tabs on what search engines have indexed.

Prevent Duplicate Content Issues

Duplicate content can confuse search engines and negatively impact your site's SEO. With robots.txt, you can prevent bots from crawling and indexing duplicate content, ensuring that only the most relevant version of your content is considered for search results.

Protect Sensitive Information

Some areas of your website might contain sensitive data you don't want to expose to the public or have indexed by search engines. A well-structured robots.txt file asks compliant bots to stay out of these areas. Remember that the file itself is publicly readable and that bots aren't forced to obey it, so pair it with real access controls, such as password protection, for anything truly private.

Optimize Crawl Budget

Search engines allocate a crawl budget to each website, determining how frequently and deeply bots crawl your site. Using robots.txt, you can guide bots toward your most valuable and relevant pages, ensuring efficient use of your crawl budget.

Enhance Site Performance

Allowing bots to crawl your site without restrictions can consume server resources and impact site performance. By controlling crawling with robots.txt, you can reduce the load on your server, leading to faster loading times and better user experiences.
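One directive aimed at server load is `Crawl-delay`, which asks a bot to wait a number of seconds between requests. Not every crawler supports it (Googlebot, for example, ignores it). The sketch below shows how a well-behaved crawler might read the value and space out its requests. The rules, URLs, and the helper `fetch_schedule` are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules asking every bot to wait 10 seconds between requests.
ROBOTS_TXT = """\
User-agent: *
Crawl-delay: 10
Disallow: /wp-admin/
"""


def fetch_schedule(robots_txt, user_agent, urls):
    """Return (seconds_from_start, url) pairs for the URLs a bot may fetch."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # crawl_delay() returns None when no delay applies to this user agent.
    delay = parser.crawl_delay(user_agent) or 0
    allowed = [url for url in urls if parser.can_fetch(user_agent, url)]
    return [(index * delay, url) for index, url in enumerate(allowed)]


if __name__ == "__main__":
    urls = [
        "https://example.com/a/",
        "https://example.com/wp-admin/",
        "https://example.com/b/",
    ]
    for offset, url in fetch_schedule(ROBOTS_TXT, "AnyBot", urls):
        print(f"t+{offset}s  {url}")
```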

How to Generate a Robots.txt File in WordPress

Generating a robots.txt file in WordPress is super easy. That’s especially true if you have a powerful WordPress SEO plugin like All In One SEO (AIOSEO).

AIOSEO is the best WordPress SEO plugin on the market, boasting over 3 million active installs. Millions of savvy website owners and marketers trust the plugin to help them dominate SERPs and drive relevant site traffic. That’s because the plugin has many powerful SEO features and modules to help you optimize your website for search engines and users. Examples of the features you’ll find in AIOSEO include:

- Search Statistics: This powerful Google Search Console integration lets you track your keyword rankings and see important SEO metrics with one click.

- Next-gen Schema generator: This no-code schema generator lets you generate and output any type of schema markup on your site.

- Redirection Manager: Helps you manage redirects and eliminate 404 errors, making it easier for search engines to crawl and index your site.

- Link Assistant: Powerful internal linking tool that automates building links between pages on your site. It also gives you an audit of outbound links.

- IndexNow: For fast indexing on search engines that support the IndexNow protocol (like Bing and Yandex).

- Sitemap generator: Automatically generate different types of sitemaps to notify all search engines of any updates on your site.

- And more.

For step-by-step instructions on how to install AIOSEO, check out our installation guide.

One of the original and most loved features in AIOSEO is the Robots.txt editor. This powerful module makes it easy to generate and edit robots.txt files, even without coding or technical knowledge.

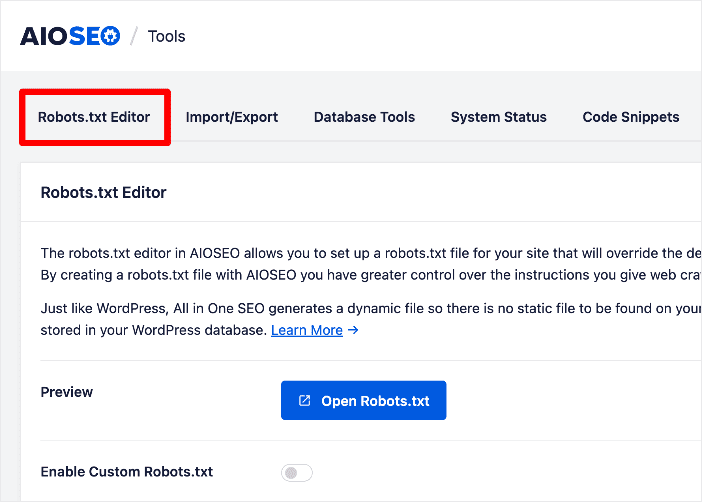

You can find the robots.txt editor by clicking Tools on your AIOSEO menu.

One of the first things you’ll notice is a blue Open Robots.txt button. This is because WordPress automatically generates a robots.txt file for you. But because it’s not optimized, you’ll want to edit it. Here are the steps you need to do just that:

Step 1: Enable custom robots.txt

You can do this by clicking on the Enable Custom Robots.txt button. This will open a window allowing you to edit your robots.txt file.

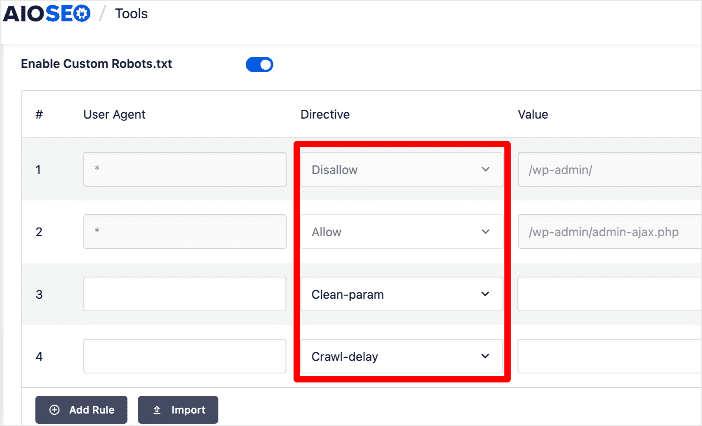

Step 2: Customize your directives

These are the instructions you want search bots to follow. Examples include Allow, Disallow, Clean-param, and Crawl-delay.

In this section, you can also determine which user agents (search crawlers) should follow the directive. You’ll also have to stipulate the value (URL, URL parameters, or frequency) of the directive.

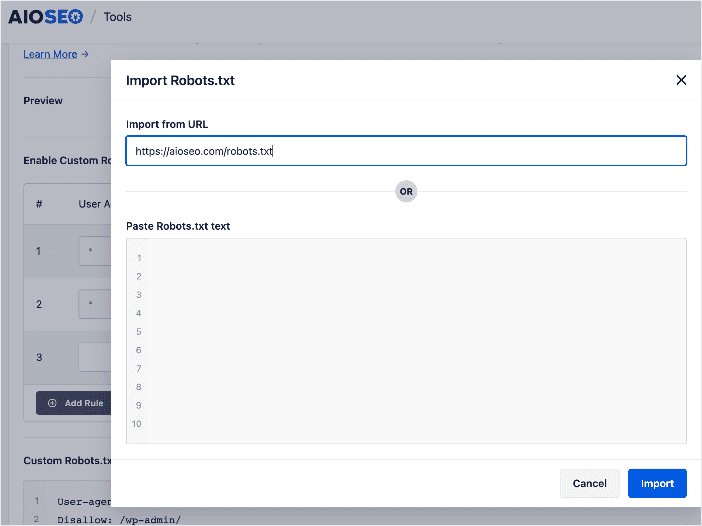
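To show how those three inputs (user agent, directive, and value) turn into file text, here is a small Python sketch. The function `render_robots_txt` and the sample rules are hypothetical and only illustrate the file format; this is not how AIOSEO generates the file internally.

```python
def render_robots_txt(rules):
    """Build robots.txt text from (user_agent, directive, value) tuples.

    Rules are grouped by user agent in the order each agent first appears.
    """
    groups = {}
    for user_agent, directive, value in rules:
        groups.setdefault(user_agent, []).append((directive, value))

    blocks = []
    for user_agent, directives in groups.items():
        lines = [f"User-agent: {user_agent}"]
        lines += [f"{directive}: {value}" for directive, value in directives]
        blocks.append("\n".join(lines))
    # Groups are separated by a blank line; the file ends with a newline.
    return "\n\n".join(blocks) + "\n"


if __name__ == "__main__":
    # Hypothetical rules, similar to rows you might enter in a rule editor.
    sample_rules = [
        ("*", "Disallow", "/wp-admin/"),
        ("*", "Allow", "/wp-admin/admin-ajax.php"),
        ("Bingbot", "Crawl-delay", "5"),
    ]
    print(render_robots_txt(sample_rules), end="")
```

Each row becomes one line under its user agent's group, which is why the editor asks for all three values per rule.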

And that’s it!

Customizing your robots.txt file is that easy.

Alternatively, you can choose to import a robots.txt file from another site. To do this, click the Import button. Doing so will open a window where you can either import your file using a URL or copy and paste the desired file.

For step-by-step instructions on editing your robots.txt file, check out this tutorial.

What is Robots.txt? Your FAQs Answered

What is robots.txt?

A robots.txt file is a plain text file placed in the root directory of a website to provide instructions to search engine bots and other web crawlers about which parts of the site should be crawled or excluded from crawling. It acts as a set of guidelines for these bots to follow when interacting with the website's content.

How do I create a robots.txt file?

You can easily create a robots.txt file using a plugin like AIOSEO. Alternatively, you can take the longer route and create a plain text file named robots.txt and upload it to your site’s root directory.
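If you take the manual route, the two things to get right are the file name and its location. The Python sketch below works out where a site's robots.txt must live and writes a file with that exact name into a folder. The helper names, the sample page URL, and the temporary folder standing in for your site's root directory are assumptions for illustration; uploading the file to your host is a separate step.

```python
import tempfile
from pathlib import Path
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url: str) -> str:
    """Return the robots.txt URL for the site that serves `page_url`."""
    parts = urlsplit(page_url)
    # The file always sits at the root, whatever page you start from.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


def write_robots_txt(site_root: Path, content: str) -> Path:
    """Write `content` to a file named robots.txt inside `site_root`."""
    target = site_root / "robots.txt"
    target.write_text(content, encoding="utf-8")
    return target


if __name__ == "__main__":
    print(robots_url("https://aioseo.com/blog/some-post/"))
    # A temporary folder stands in for the site's root directory here.
    with tempfile.TemporaryDirectory() as folder:
        path = write_robots_txt(Path(folder), "User-agent: *\nDisallow:\n")
        print(path.read_text(encoding="utf-8"))
```

An empty `Disallow:` line, as used in the sample content, allows bots to crawl everything.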

Can I use robots.txt to improve SEO?

Yes, optimizing your robots.txt file can contribute to better SEO. By guiding search engine bots to crawl and index your most valuable content while excluding duplicate or irrelevant content, you can enhance your website's visibility and ranking in search results.

What is Robots.txt? Now that You Know, Optimize Yours

As you can see, a robots.txt file is a crucial part of any website. Optimizing yours ensures that your most important pages are crawled and indexed, leading to better search rankings.

So, go ahead and use AIOSEO’s robots.txt editor to optimize yours.

We hope this post helped you understand what robots.txt in WordPress is and why it’s important to optimize yours. You may also want to check out other articles on our blog, like our guide on getting indexed on Google or our tips on using Search Statistics to boost your rankings.

If you found this article helpful, then please subscribe to our YouTube Channel. You’ll find many more helpful tutorials there. You can also follow us on Twitter, LinkedIn, or Facebook to stay in the loop.

Disclosure: Our content is reader-supported. This means if you click on some of our links, then we may earn a commission. We only recommend products that we believe will add value to our readers.